Events and Streams in Node.js

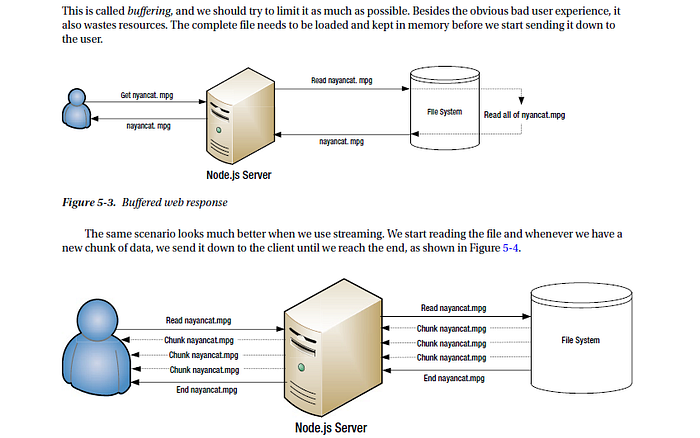

The traditional way of reading input and writing a response loads the whole file into memory, which consumes far more memory than necessary and wastes resources. Node.js is asynchronous throughout, and its events and streams offer a replacement for this cumbersome method.

Node.js Events

An event is like a broadcast, while a callback is like a handshake. A component that raises events knows nothing about its clients, while a component that uses callbacks knows a great deal. This makes events ideal for scenarios where the significance of the occurrence is determined by the client. Maybe the client wants to know, maybe it doesn’t.
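The broadcast-versus-handshake distinction can be sketched roughly as follows. The `Thermostat` class, its `change` event, and the `readTemperature` function are hypothetical names used only for illustration, not part of any Node.js API.

```javascript
const EventEmitter = require('events').EventEmitter;

// Callback style (handshake): the caller must hand over exactly one function,
// and the component calls it directly.
function readTemperature(callback) {
  callback(null, 21);
}

// Event style (broadcast): the component announces what happened and does
// not know whether zero, one, or many clients are listening.
class Thermostat extends EventEmitter {
  set(value) {
    this.emit('change', value);
  }
}

const log = [];

readTemperature(function (err, value) {
  log.push('callback got ' + value);
});

const thermostat = new Thermostat();
thermostat.set(18); // nobody is listening yet, and that is fine

thermostat.on('change', function (value) {
  log.push('listener A got ' + value);
});
thermostat.on('change', function (value) {
  log.push('listener B got ' + value);
});
thermostat.set(20);

console.log(log);
```

The first `set` call is simply ignored because no client has subscribed, which is the "maybe the client wants to know, maybe it doesn't" case.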

Node.js comes with built-in support for events in the core events module. As always, use require('events') to load the module. The events module has one simple class, EventEmitter, which we present next.

EventEmitter Class

Subscribing to an event looks like this:

events/1basic.js

var EventEmitter = require('events').EventEmitter;

var emitter = new EventEmitter();

// Subscribe

emitter.on('foo', function (arg1, arg2) {

  console.log('Foo raised, Args:', arg1, arg2);

});

// Emit

emitter.emit('foo', { a: 123 }, { b: 456 });

The code creates an instance with a simple `new EventEmitter()` call and subscribes with `on`, which takes two arguments: (i) a string naming the event and (ii) a listener (callback function). Finally, `emit` raises the event. The event name can be followed by any number of arguments, which are passed on to the listeners.

Unsubscribing from an event looks like this:

var EventEmitter = require('events').EventEmitter;

var emitter = new EventEmitter();

var fooHandler = function () {

  console.log('handler called');

  // Unsubscribe

  emitter.removeListener('foo', fooHandler);

};

emitter.on('foo', fooHandler);

// Emit twice

emitter.emit('foo');

emitter.emit('foo');

Here the handler unsubscribes itself the first time it runs. Note that the second event goes unnoticed, because the listener was already removed while handling the first one.
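One way to confirm this behaviour, as a small sketch, is to count the calls and inspect the return value of `emit`, which is `true` only when the event had listeners. The `calls` counter and the variable names are illustrative.

```javascript
const EventEmitter = require('events').EventEmitter;

const emitter = new EventEmitter();
let calls = 0;

function fooHandler() {
  calls += 1;
  // Unsubscribe from inside the handler itself
  emitter.removeListener('foo', fooHandler);
}

emitter.on('foo', fooHandler);

const firstHadListeners = emitter.emit('foo');  // handler runs, then removes itself
const secondHadListeners = emitter.emit('foo'); // nobody is subscribed any more

console.log(calls, firstHadListeners, secondHadListeners, emitter.listenerCount('foo'));
// prints: 1 true false 0
```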

Applying multiple listeners to the same event

...

eventEmitter.on('randomString', function (randomStr) {

console.log('Listener 1 received: ' + randomStr)

})

eventEmitter.on('randomString', function (randomStr) {

console.log('Listener 2 received: ' + randomStr)

})

eventEmitter.emit('randomString', randomString())

...

This prints:

Listener 1 received: NodeJs

Listener 2 received: NodeJs

If we want a listener to react to an event only once, EventEmitter provides a `once` function that calls the registered listener a single time. For example:

events/once.js

var EventEmitter = require('events').EventEmitter;

var emitter = new EventEmitter();

emitter.once('foo', function () {

  console.log('foo has been raised');

});

// Emit twice

emitter.emit('foo');

emitter.emit('foo');

Event listener for foo will be called only once.
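To make the difference between `on` and `once` visible, here is a minimal sketch that registers one listener of each kind on the same event and counts how often each runs. The counter names are illustrative.

```javascript
const EventEmitter = require('events').EventEmitter;

const emitter = new EventEmitter();
let onCalls = 0;
let onceCalls = 0;

emitter.on('foo', function () {
  onCalls += 1; // runs on every emit
});

emitter.once('foo', function () {
  onceCalls += 1; // runs on the first emit only, then is removed
});

emitter.emit('foo');
emitter.emit('foo');
emitter.emit('foo');

console.log(onCalls, onceCalls); // prints: 3 1
```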

Removing and adding new listeners

EventEmitter instances also raise a `newListener` event whenever a new listener is added and `removeListener` whenever a listener is removed, which can help you out in tricky situations such as when you want to track down the instant an event listener is registered/unregistered.

var EventEmitter = require('events').EventEmitter;

var emitter = new EventEmitter();

// Listener addition / removal notifications

emitter.on('removeListener', function (eventName, listenerFunction) {

  console.log(eventName, 'listener removed', listenerFunction.name);

});

emitter.on('newListener', function (eventName, listenerFunction) {

  console.log(eventName, 'listener added', listenerFunction.name);

});

function a() { }

function b() { }

// Add

emitter.on('foo', a);

emitter.on('foo', b);

// Remove

emitter.removeListener('foo', a);

emitter.removeListener('foo', b);

Note that if you add a `removeListener` after adding a handler for `newListener`, you will get notified about the `removeListener` addition as well, which is why it is conventional to add the removeListener event handler first as we did in this sample.
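The effect of the registration order can be checked with a short sketch. The helper `namesSeenByNewListener` is a hypothetical function written for this illustration. It records every event name that the `newListener` handler is told about.

```javascript
const EventEmitter = require('events').EventEmitter;

// Hypothetical helper: returns the event names reported to the
// newListener handler for a given registration order.
function namesSeenByNewListener(registerRemoveHandlerFirst) {
  const emitter = new EventEmitter();
  const seen = [];
  const onRemove = function () {};
  const onNew = function (eventName) {
    seen.push(eventName);
  };

  if (registerRemoveHandlerFirst) {
    emitter.on('removeListener', onRemove);
    emitter.on('newListener', onNew);
  } else {
    emitter.on('newListener', onNew);
    emitter.on('removeListener', onRemove);
  }

  emitter.on('foo', function () {});
  return seen;
}

console.log(namesSeenByNewListener(true));  // [ 'foo' ]
console.log(namesSeenByNewListener(false)); // [ 'removeListener', 'foo' ]
```

With the conventional order, the only notification is for the `foo` listener. With the reversed order, the `removeListener` handler itself shows up as an addition.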

Pipe and Streams in Node.js

Before moving on to streams, it helps to get to know pipe.

Pipe

All streams are instances of EventEmitter. They emit events that can be used to read and write data. However, we can consume stream data in a simpler way using the pipe method.

This is one of the things that make streams in Node.js so awesome. Consider a scenario where you load a file from the file system and stream it to a client.

This can be done with the fs module, the Node.js core module for reading and writing files. Among other things, it provides utility functions to create readable and writable streams from a file.

var fs = require('fs');

// Create readable stream

var readableStream = fs.createReadStream('./cool.txt');

// Pipe it to stdout

readableStream.pipe(process.stdout);

For most purposes, pipe is all that you need to know about as an API consumer, but it is worth knowing more details when you want to delve deeper into streams.

Streams in Node.js

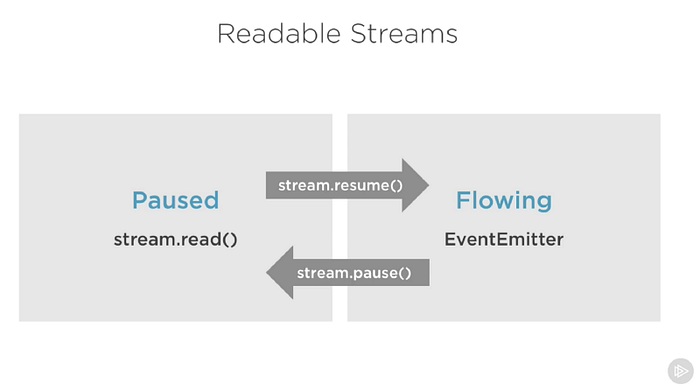

Streams in Node.js are based on events. All that the pipe operation does is subscribe to the relevant events on the source and call the relevant functions on the destination.
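As a rough illustration of that idea, the sketch below hand-rolls a much simplified stand-in for pipe. The `simplePipe` helper is hypothetical and is not how Node.js implements `pipe` internally (the real method also deals with errors, unpiping, and more). It only shows the pattern of listening to events on the source and calling functions on the destination.

```javascript
const { Readable, Writable } = require('stream');

// Hypothetical helper: a simplified stand-in for pipe, for illustration only.
function simplePipe(source, destination) {
  source.on('data', function (chunk) {
    // write() returns false when the destination's buffer is full
    const canContinue = destination.write(chunk);
    if (!canContinue) {
      // Stop reading until the destination has drained its buffer
      source.pause();
      destination.once('drain', function () {
        source.resume();
      });
    }
  });

  source.on('end', function () {
    // No more data: tell the destination we are done writing
    destination.end();
  });

  return destination;
}

const received = [];
const source = Readable.from(['foo ', 'bar ', 'bas']);
const sink = new Writable({
  write(chunk, encoding, callback) {
    received.push(chunk.toString());
    callback();
  }
});

simplePipe(source, sink).on('finish', function () {
  console.log(received.join('')); // prints: foo bar bas
});
```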

Readable Streams

process.stdin.on('readable', function () {

  var buf = process.stdin.read();

  if (buf != null) {

    console.log('Got:');

    process.stdout.write(buf.toString());

  }

  else {

    console.log('Read complete!');

  }

});

The readable event is raised whenever there is new data to be read from a stream. Once inside the event handler, you can call the read function on the stream to read the data. read returns null when there is nothing left in the buffer to read.

The most important events on a readable stream are:

- The data event, which is emitted whenever the stream passes a chunk of data to the consumer

- The end event, which is emitted when there is no more data to be consumed from the stream.

Source: Samer Buna’s blog
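The data and end events can be tried out with a small in-memory stream. This is a minimal sketch that uses `Readable.from` to build a stream from an array, so it needs no file or terminal input. The chunk contents are made up for the example.

```javascript
const { Readable } = require('stream');

const chunks = [];
const stream = Readable.from(['first chunk', 'second chunk']);

// Emitted once per chunk handed to the consumer
stream.on('data', function (chunk) {
  chunks.push(chunk);
});

// Emitted once, after the last chunk has been consumed
stream.on('end', function () {
  console.log('Received', chunks.length, 'chunks');
});
```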

Writing to Writable Streams

var fs = require('fs');

var ws = fs.createWriteStream('message.txt');

ws.write('foo bar ');

ws.end('bas');

In this sample, we simply wrote foo bar bas to a writable file stream.

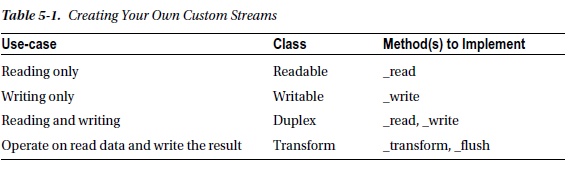

About creating custom streams

Difference between fs.readFile() and fs.createReadStream()

fs.readFile() reads the entire file into memory before writing it to the response, whereas fs.createReadStream() sends the file in small chunks. Splitting the transfer into chunk-sized writes reduces memory load and leaves less garbage to collect.
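The difference can be observed with a small experiment. The sketch below assumes a throwaway 200 KiB file created in the temp directory. The `compareReads` helper, the file name, and the file size are all hypothetical choices for this illustration, and the number of chunks depends on the stream's buffer size.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: writes a throwaway 200 KiB file, reads it both ways,
// and reports what each approach delivered.
function compareReads(callback) {
  const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'stream-demo-'));
  const filePath = path.join(dir, 'sample.txt');
  fs.writeFileSync(filePath, 'x'.repeat(200 * 1024));

  const result = { readFileBytes: 0, streamChunks: 0, streamBytes: 0 };

  // readFile hands over the whole file as a single Buffer
  fs.readFile(filePath, function (err, data) {
    if (err) throw err;
    result.readFileBytes = data.length;

    // createReadStream delivers the same bytes piece by piece
    fs.createReadStream(filePath)
      .on('data', function (chunk) {
        result.streamChunks += 1;
        result.streamBytes += chunk.length;
      })
      .on('close', function () {
        // Clean up the temporary file and directory
        fs.unlinkSync(filePath);
        fs.rmdirSync(dir);
        callback(result);
      });
  });
}

compareReads(function (result) {
  console.log(result);
});
```

Both approaches end up with the same number of bytes, but the stream never needs to hold more than one chunk at a time.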

The most important concepts are that of Readable streams, Writable streams, Duplex streams, and Transform streams.

A readable stream is one that you can read data from but not write to. A good example of this is process.stdin, which can be used to stream data from the standard input.

A writable stream is one that you can write to but not read from. A good example is process.stdout, which can be used to stream data to the standard output.

A duplex stream is one that you can both read from and write to. A good example of this is the network socket. You can write data to the network socket as well as read data from it.

A transform stream is a special case of a duplex stream where the output of the stream is in some way computed from the input. These are also called through streams. Good examples of these are encryption and compression streams.
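A transform stream is easy to sketch with the `Transform` class from the stream module. The example below is an illustrative upper-casing transform rather than a real encryption or compression stream. The names `upperCase` and `collector` are made up for this sketch.

```javascript
const { Readable, Transform, Writable, pipeline } = require('stream');

// Transform stream: the output is computed from the input (upper-cased here)
const upperCase = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  }
});

// Writable stream that simply collects whatever it receives
const output = [];
const collector = new Writable({
  write(chunk, encoding, callback) {
    output.push(chunk.toString());
    callback();
  }
});

// readable -> transform -> writable
pipeline(Readable.from(['foo ', 'bar ', 'bas']), upperCase, collector, function (err) {
  if (err) throw err;
  console.log(output.join('')); // prints: FOO BAR BAS
});
```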

I hope this summed up the basics of events, streams, and the pipe function in Node.js. I am glad Medium is a platform where writers can share resources and knowledge from their own perspectives and change the way we learn.

To dig deeper into streams, here’s the article from which I took some examples: https://medium.freecodecamp.org/node-js-streams-everything-you-need-to-know-c9141306be93

Resources : Beginning Node.js - Apress foundation, https://coligo.io/nodejs-event-emitter/ , https://medium.freecodecamp.org/node-js-streams-everything-you-need-to-know-c9141306be93 , https://nodejs.org/api/stream.html