Big O in JavaScript

Like many new developers before me, Big O went straight over my head the first time I heard about it. It was a topic that kicked in my Imposter Syndrome big time. Now that I’ve had some time to let the idea of Big O sink in, here’s a quick guide to help others with this nebulous topic.

What is Big O?

In short, Big O is the worst-case scenario growth curve for the complexity of an algorithm. Big O notation comes two-fold: Time Complexity and Space Complexity. This article will focus on Time Complexity.

Some common Big O notations include:

- O(n)

- O(n²)

- O(log n)

Let’s break down this notation.

The “O “in Big O stands for “order of”. And refers to the rate at which the function is growing.

The ’n’ is a variable that represents the size of the input. The letter ’n’ is a commonly used variable in Big O, but you can also see other variables being used, like ‘m’ in O(n*m). Using a second variable like ‘m’ means that there is another input in the function that is separate from ’n’. An example of an input in JavaScript would be an array where ’n’ references the number of elements in the array.

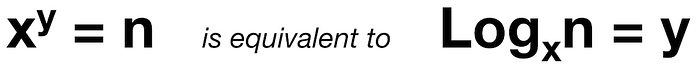

What is ‘log’? Perhaps like me, you haven’t heard this mathematical term since high school. Log, short for logarithm, is an inverse operation to exponentiation. For example:

This relates to Big O because if ‘n’ is fairly large and increases, our result ‘y’ becomes marginally higher, increasing by a constant amount. Which means that as our input ‘n’ increases, the time for the algorithm to run will increase at a constant amount rather than at the rate of the input. For example:

This is what makes O(log n) the sought after Big O for algorithms that deal with large datasets.

Big O Examples in JS

O(1)

As the input increases, time to run the algorithm stays constant.

Example:

const firstElemEven = (array) => {

return array[0] %2 === 0 ? true : false;

}O(n)

As the input increases, the time to run the algorithm will grow proportionally.

Example:

const hasValue = (array, value) => {

for (var i = 0; i < array.length; i++){

if (array[i] === value){

return true;

}

}

return false;

}O(n²)

As the input increases, the time to run the algorithm grows at the rate of it’s square.

This is frequently seen with nested ‘for loops’ because the inner loop will run ’n’ times for each time the outer loop runs. Which makes the resulting time complexity n*n or n².

Example:

const findMatch = (string) => {

for (var i = 0; i < string.length; i++){

for ( var j = i+1; j < string.length; j++){

if (string[i] === string[j]) {

return true;

}

}

}

return false;

}O(2^n)

For each additional input, the time to run the algorithm doubles.

Example:

const fib = (num) => {

if (num <= 1){

return 1;

}

return fib(num - 1) + fib(num - 2);

}O(log n)

As mentioned earlier, as our input ‘n’ increases, the time will increase by a constant amount. More significantly, an algorithm of O(log n) will half the input each time it iterates.

Example:

Binary Search Tree

O(n log n)

For each input, the algorithm is running an operation at O(log n)

Example:

Merge sort

How to determine Big O

Now that we have a better understanding of Big O basics, let walk through the steps in determining the Big O for an algorithm. Keep in mind that Big O notation is just an estimation so it’s not meant to be an exact calculation. Let’s use the following algorithm, which finds the index of the first appearance of a string inside of another, as an example:

function indexOf (needle, haystack) {

for (let hIdx = 0; hIdx <= haystack.length - needle.length; hIdx++) {

for (let nIdx = 0; nIdx < needle.length; nIdx++) {

if (haystack[hIdx + nIdx] !== needle[nIdx]) break;

if (nIdx + 1 === needle.length) return hIdx;

}

}

return -1;

}Step 1: Determine the input

Input can be an array, string, or even just an integer. In our above example, the inputs are two strings: needle and haystack.

Let’s represent needle with the variable ’n’ and haystack with the variable ‘m’.

Step 2: Evaluate each line and how the input is used

Going over line by line, figure out the time complexity for each operation.

Looking at our example we have our haystack input, ‘m’, in a for loop which means that every element will be checked. This give us an initial O(m) complexity.

Next we have a nested for loop where the needle is looped through. This adds the complexity O(n) for each element of the haystack, ‘m’.

Then there are two ‘if’ statements which take only indexes as inputs and therefore would remain constant in time complexity regardless if its input increased. This means the two ‘if’ statements evaluate to O(1).

Last we have a return statement, which also evaluates to O(1).

Step 3: Drop Constants

A constant is a fixed value in an expression and when calculating Big O we don’t need to include them.

So based on our evaluation we currently have O(n *m * (1 + 1)).

Since the 1’s are constant, we can drop those.

Step 4: Find the most significant notation

After dropping constants, then take a look at the remaining notation and keep the most significant. Ask, “What is the worst-case scenario growth curve?” So if you come across an algorithm that is O(n + 2^n) the resulting Big O would be O(2^n) because it is the most significant.

Using our example, we are currently left with O(n*m) so there is nothing more we can drop since as variables neither ’n’ nor ‘m’ is more significant than the other.

So the final Big O for the example algorithm is “order of n*m” or O(n*m).

Conclusion

Hopefully this post was helpful in breaking down Big O notation for you. Here are some additional resources you can check out (and that I checked out while writing this post) to learn more about Big O.

https://www.interviewcake.com/article/java/big-o-notation-time-and-space-complexity