How APIs Can Benefit From Computational Cost Equality

Definition

Let me coin a phrase for brevity:

Homogeneous API: an API in which all requests have equal computational cost.

What’s computational cost? It’s the total of all resources consumed to produce an outcome, e.g. CPU time, memory, disk space, and network bandwidth. Let’s consider a service that stores user-specific information, such as Medium. It has a reading list feature, which lets you save stories for later. If we design the backend to serve requests of the form “give me all stories for user A”, the computational cost will vary from user to user, because it grows with the number of stories each user has saved. This makes the API heterogeneous.

Now let’s take a look at the pros of making an API homogeneous.

Advantages

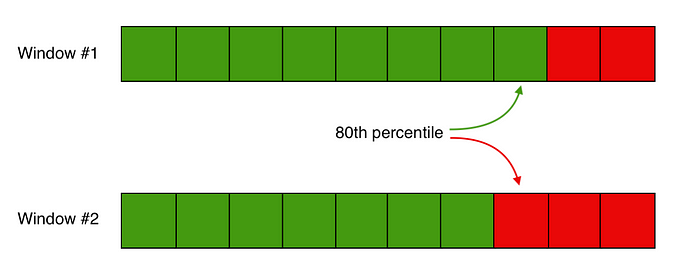

State-of-the-art observability

The spread of a heterogeneous API’s latencies depends on how users behave: how much data they save and, consequently, request. Thus, if a given percentile grows, it’s hard to tell whether the user distribution changed or the service itself slowed down.

Conversely, users can’t skew the metrics of a homogeneous system, so changes in service metrics correlate much more closely with code pushes.
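To make this concrete, here is a toy model, assuming latency grows linearly with the number of items a request returns. The user counts, list sizes, and millisecond values are made up for illustration, and `percentile` and `latencies` are hypothetical helpers. Under this model, a p99 jump looks identical whether users started saving more or the code got slower, while capping the response size (a homogeneous API) leaves only the code regression visible.

```python
import math
from fractions import Fraction
from typing import List, Optional, Sequence

def percentile(values: Sequence[float], q: Fraction) -> float:
    """Nearest-rank percentile: q=Fraction(99, 100) gives p99."""
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered))  # exact arithmetic, no float rounding
    return ordered[rank - 1]

def latencies(list_sizes: Sequence[int], per_item_ms: float,
              page_size: Optional[int] = None, base_ms: float = 10.0) -> List[float]:
    """Toy cost model: latency grows linearly with the number of items returned."""
    return [base_ms + per_item_ms * (size if page_size is None else min(size, page_size))
            for size in list_sizes]

P99 = Fraction(99, 100)
users = list(range(1, 101))        # 100 hypothetical users saving 1..100 stories
hoarders = [2 * s for s in users]  # the same users after saving twice as much

# Heterogeneous API ("give me all stories"): two different causes, same symptom.
print(percentile(latencies(users, 1.0), P99))     # 109.0  baseline
print(percentile(latencies(hoarders, 1.0), P99))  # 208.0  users changed, code did not
print(percentile(latencies(users, 2.0), P99))     # 208.0  code regressed, users did not

# Homogeneous API (at most 10 items per request): only the regression moves p99.
print(percentile(latencies(users, 1.0, page_size=10), P99))     # 20.0  baseline
print(percentile(latencies(hoarders, 1.0, page_size=10), P99))  # 20.0  users changed
print(percentile(latencies(users, 2.0, page_size=10), P99))     # 30.0  code regressed
```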

SLOs are easier to enforce

Since the metrics fairly represent the service’s health, there are fewer false-positive alerts and less burden on the operations team.

Happy users

With a homogeneous API, you can be sure that no user consistently struggles with your service. With a heterogeneous one, for example, you might monitor the p999 trend to prevent SLA violations, while a “heavy user” falls into p9999. Their experience might degrade over time, and you won’t notice the problem unless they complain explicitly.
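A small simulation of that blind spot, with hypothetical numbers: 10,000 requests a day, 5 of which come from one heavy user, and every other request taking 50 ms. The p999 stays flat even when the heavy user’s latency grows tenfold; only p9999 reveals it. `percentile` and `day_of_traffic` are illustrative helpers, not part of any monitoring tool.

```python
import math
from fractions import Fraction
from typing import List, Sequence

def percentile(values: Sequence[float], q: Fraction) -> float:
    """Nearest-rank percentile, e.g. q=Fraction(999, 1000) for p999."""
    ordered = sorted(values)
    return ordered[math.ceil(q * len(ordered)) - 1]

P999, P9999 = Fraction(999, 1000), Fraction(9999, 10000)

def day_of_traffic(heavy_user_ms: float) -> List[float]:
    # 9,995 ordinary requests at 50 ms plus 5 requests from one heavy user.
    return [50.0] * 9_995 + [heavy_user_ms] * 5

for heavy_ms in (2_000.0, 20_000.0):  # the heavy user's experience degrades 10x
    traffic = day_of_traffic(heavy_ms)
    print(percentile(traffic, P999), percentile(traffic, P9999))
# 50.0 2000.0
# 50.0 20000.0
```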

So, no more “heavy user” tickets, which are harder to investigate and impossible to prevent. Amazing, right?

Fault-tolerance configuration

To provide a sane experience for everyone, you’re obliged to set timeouts and deadlines as long as the heaviest request takes. Although this allows everyone to be served, lightweight requests might be stuck for an unnecessarily long time. If a request usually completes in under 100 ms and the timeout is 1000 ms, the client will waste an extra 900 ms on a network blip. A shorter timeout would have let the request be retried and succeed earlier. Having all requests of similar size solves this issue, allowing us to apply timeouts and retries effectively.
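A toy simulation of the timeout arithmetic above. It assumes a client that retries immediately after each timeout, with no backoff or jitter; `elapsed_until_success` and the latencies are hypothetical. A lost packet costs a full timeout, so a tight timeout recovers faster, but in a heterogeneous API the same tight timeout would make heavy requests fail on every attempt.

```python
from typing import Optional, Sequence

def elapsed_until_success(attempts: Sequence[Optional[float]],
                          timeout_ms: float) -> Optional[float]:
    """Total time until the first successful attempt, or None if all attempts fail.

    attempts[i] is the server latency of attempt i in ms, or None if the
    request was lost on the network and never completes.
    """
    elapsed = 0.0
    for latency in attempts:
        if latency is not None and latency <= timeout_ms:
            return elapsed + latency
        # Lost or too slow: the client waits out the full timeout, then retries.
        elapsed += timeout_ms
    return None

# One lost attempt, then a normal 80 ms response.
print(elapsed_until_success([None, 80.0], timeout_ms=1000))  # 1080.0
print(elapsed_until_success([None, 80.0], timeout_ms=200))   # 280.0
# A heavy 900 ms request never fits into a 200 ms timeout.
print(elapsed_until_success([900.0] * 3, timeout_ms=200))    # None
```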

How to implement

Making an API homogeneous requires two steps:

Pagination

Instead of returning all entities, return at most N. Sadly, the need to change clients is not the only cost that comes with pagination.

Another one is the consistent editing problem. It’s likely to occur with explicit pagination, where a user can see page numbers and navigate between them. The simplest way to implement pagination is to calculate static indexes via “skip” and “limit”, so page number P of size S covers the following 1-based indexes (in interval notation):

$$(S \cdot (P - 1),\ S \cdot P]$$

Now, imagine a situation where a client deletes an entity from one page and then goes forward:
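A minimal sketch of skip/limit pagination over an in-memory list reproduces this scenario. It assumes 1-based page numbers and a page size of 3, chosen to match the entity numbers in the text; `page_by_offset` is a hypothetical helper, and a database would do the same with its own offset/limit clause.

```python
from typing import List

def page_by_offset(items: List[int], page: int, size: int) -> List[int]:
    """Skip/limit pagination: page P (1-based) holds positions (size*(P-1), size*P]."""
    skip = size * (page - 1)
    return items[skip:skip + size]

entities = [1, 2, 3, 4, 5, 6, 7]
print(page_by_offset(entities, page=1, size=3))  # [1, 2, 3]  the user is on page 1
entities.remove(3)                               # ...and deletes entity #3
print(page_by_offset(entities, page=2, size=3))  # [5, 6, 7]  entity #4 is skipped
```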

Since the user deleted entity #3, the entities from page #2 shift one position to the left, so entity #4 slides onto the page the user has just left and is effectively lost. To see it, the user has to go back or reload the page after the deletion. Instead of fetching pages by statically calculated indexes, retrieve S entities after N, where N is the identifier of the last entity on the current page. When jumping more than one page away, calculating indexes is fine.
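This approach is commonly called keyset (or cursor) pagination. A minimal sketch, assuming entities are sorted by a unique, increasing integer id; `page_after` is a hypothetical helper, and the SQL in the docstring is only an indication of how a database query would typically look.

```python
from bisect import bisect_right
from typing import List, Optional

def page_after(sorted_ids: List[int], after_id: Optional[int], size: int) -> List[int]:
    """Keyset pagination: up to `size` ids strictly greater than `after_id`.

    Assumes `sorted_ids` is sorted ascending with unique ids. In SQL this would
    typically be `WHERE id > ? ORDER BY id LIMIT ?`.
    """
    start = 0 if after_id is None else bisect_right(sorted_ids, after_id)
    return sorted_ids[start:start + size]

entities = [1, 2, 3, 4, 5, 6, 7]
first = page_after(entities, None, 3)        # [1, 2, 3]
entities.remove(3)                           # the user deletes entity #3
second = page_after(entities, first[-1], 3)  # [4, 5, 6]: nothing is lost
print(first, second)
```

The cursor (here, the last id on the page) stays valid even after the entity it names is deleted, because the query asks for ids greater than it rather than for a position. If the list is sorted by something other than the id, such as the date a story was saved, the cursor would typically be the (sort key, id) pair of the last item.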

Choose the page size wisely: setting it to 1000 when the median object count is 25 won’t make an API homogeneous, because most requests would still return a user’s entire list, whose size varies from user to user. Examine the clients to figure out how many objects can be visible on the screen at the same time. Generally, multiplying this number by 2 to 5 gives a reasonable page size.
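The rule of thumb can be written down directly, together with one rough way to sanity-check a candidate page size: the share of users whose first page is full, i.e. whose request cost is capped by the page size rather than by their list length. That check is an assumption of this sketch, not something from the text, and the per-user list sizes are made up.

```python
from statistics import median
from typing import Sequence

def suggest_page_size(visible_on_screen: int, factor: int = 3) -> int:
    """Rule of thumb: 2x to 5x the number of objects visible at the same time."""
    if not 2 <= factor <= 5:
        raise ValueError("factor should be between 2 and 5")
    return visible_on_screen * factor

def full_page_share(list_sizes: Sequence[int], page_size: int) -> float:
    """Share of users whose first page is full, so their request cost is capped."""
    return sum(size >= page_size for size in list_sizes) / len(list_sizes)

sizes = [3, 10, 25, 25, 25, 40, 90, 400]  # hypothetical per-user list sizes
print(median(sizes))                       # 25.0
print(suggest_page_size(5))                # 15
print(full_page_share(sizes, 15))          # 0.75
print(full_page_share(sizes, 1000))        # 0.0: cost still tracks list length
```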

Getting back to Medium’s “reading list”: if you take a closer look at it with a network inspector, you’ll see implicit pagination (pages are requested as you scroll down) with a page size of 10. So their “reading list” API is homogeneous (other APIs probably are too, but I didn’t collect any evidence).
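On the client side, implicit pagination can be sketched as a lazy iterator that requests the next page only when the user scrolls past the items already loaded. This does not reflect Medium’s actual API, which the text does not describe; `scroll`, `fake_fetch_page`, and the page format (items plus the id of the last item as the cursor) are hypothetical stand-ins.

```python
from bisect import bisect_right
from itertools import islice
from typing import Callable, Iterator, List, Optional, Tuple

# A page is (items, cursor for the next page); the cursor is None on the last page.
Page = Tuple[List[int], Optional[int]]

def scroll(fetch_page: Callable[[Optional[int]], Page]) -> Iterator[int]:
    """Lazily yield items, requesting the next page only when the caller needs it."""
    cursor: Optional[int] = None
    while True:
        items, cursor = fetch_page(cursor)
        yield from items
        if cursor is None:
            return

STORY_IDS = list(range(1, 26))   # hypothetical reading list with 25 saved stories
PAGE_SIZE = 10
calls: List[Optional[int]] = []  # records every request the client makes

def fake_fetch_page(after_id: Optional[int]) -> Page:
    """Stand-in for the backend: up to PAGE_SIZE ids greater than after_id."""
    calls.append(after_id)
    start = 0 if after_id is None else bisect_right(STORY_IDS, after_id)
    items = STORY_IDS[start:start + PAGE_SIZE]
    has_more = start + PAGE_SIZE < len(STORY_IDS)
    return items, (items[-1] if has_more else None)

visible = list(islice(scroll(fake_fetch_page), 12))  # user scrolls past 12 stories
print(len(visible), calls)  # 12 [None, 10]
```

Every request the backend sees asks for at most 10 stories, regardless of how long the user’s list is.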

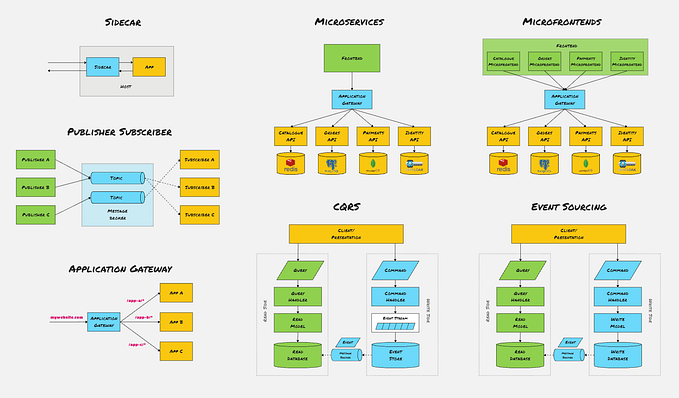

Isolation

Isolation is a paradigm in which every service serves one and only one purpose. For example, if you have a service with two endpoints, restricting each endpoint to its own thread pool won’t give perfect isolation. Today a 60/40 split may represent the user distribution, but tomorrow’s workload might change and one of the endpoints would starve. Plus, the threads reserved for the other endpoint would sit idle, which is unnecessary underutilization.
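A toy model of the thread-pool example: 10 threads split 6/4 between two endpoints, with demand measured as concurrent requests. The numbers and the `split_pools` helper are hypothetical. When the workload shifts to 90/10, endpoint A queues requests while three of B’s threads do nothing.

```python
from typing import Dict

def split_pools(pool_a: int, pool_b: int, demand_a: int, demand_b: int) -> Dict[str, int]:
    """Two endpoints with fixed thread pools; demand = concurrent requests per endpoint."""
    served_a, served_b = min(demand_a, pool_a), min(demand_b, pool_b)
    return {
        "queued_a": demand_a - served_a,  # requests waiting for endpoint A
        "queued_b": demand_b - served_b,  # requests waiting for endpoint B
        "idle_threads": (pool_a - served_a) + (pool_b - served_b),
    }

print(split_pools(6, 4, demand_a=6, demand_b=4))  # today: the 60/40 split fits
# {'queued_a': 0, 'queued_b': 0, 'idle_threads': 0}
print(split_pools(6, 4, demand_a=9, demand_b=1))  # tomorrow: A starves while B idles
# {'queued_a': 3, 'queued_b': 0, 'idle_threads': 3}
```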

Isolation is easier to achieve if you have an application template and a mature CI/CD and deployment infrastructure.

I am aware of specific domains where making an API homogeneous is impossible or of little benefit, for example query engines and math evaluation programs. Nonetheless, I believe it’s useful and feasible for most web scenarios.