JavaScript Starter Architecture, Part 2: The Polyfill Potion

You will remember from my first article, JavaScript Starter Architecture For the General Website, I stated clearly that while I am an expert software developer, I am only now trying to use JavaScript for something more serious than just a bit of code here and there for trivial things — usually employing jQuery for the cross-browser heavy lifting. While I have used JavaScript for some time, I have never truly engaged in the language and the environment fully. This article continues from the last, and fills in some rather important information I did not even know I was missing originally.

Warning: What follows is a personal voyage of discovery on the topic of polyfills. In some places I left in the blood and gore of the nastiness that is the web browsers our existence relies upon. Hopefully this will provide good context to help you decide how you will polyfill, and if you find yourself in a similar situation of discovery, it can save you a few gray hairs.

Transpilation vs. Polyfill — They work together — It is not a competition!

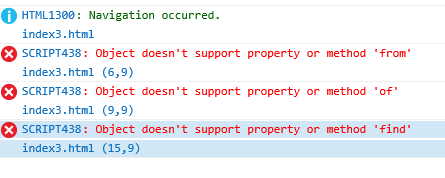

Originally, I believed that by simply using Babel all sorts of glorious magic would happen and any modern JavaScript I wrote would instantly work on any browser. Magic, being what magic is, failed me when I used a new method on Array that is not supported in Internet Explorer. Once I discovered this, I had to stop and figure out where I went wrong.

The reality is that Babel at its core provides transpilation: it takes the syntax of modern JavaScript and transforms it into older standards that a given set of JavaScript execution environments can parse and execute. What Babel does not provide is automatic inclusion of new built-ins, classes and methods. Usually you will discover this when you run your code on Internet Explorer and watch it fail. (Sure, I along with every other developer in the world would love to forget Internet Explorer ever existed, but we are not quite there yet in the marketplace.)

To ensure access to this set of newer JavaScript functionality, you will need to use what the JavaScript world calls a polyfill. Polyfills add to your JavaScript environment any of the new built-ins, classes and methods you might want or need. The JavaScript community is very vibrant in this regard, and, as new standards are proposed, polyfills are written to provide ubiquitous access.
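To make the distinction concrete, here is a minimal sketch of what a polyfill does under the hood. It is a simplified, illustrative stand-in for `Array.prototype.includes`, not the code any real polyfill library ships, and the helper name `polyfillIncludes` is my own. It is written in old-style syntax (and assumes an ES5 environment) because a polyfill has to run on the very browsers that lack the feature.

```javascript
// Install a simplified Array.prototype.includes on `proto` only if it is missing.
// Returns true when the polyfill was installed, false when a version already exists.
// NOTE: `polyfillIncludes` is a hypothetical helper name used for illustration.
function polyfillIncludes(proto) {
  if (typeof proto.includes === 'function') {
    return false; // the environment already provides it; leave it alone
  }
  Object.defineProperty(proto, 'includes', {
    configurable: true,
    writable: true,
    enumerable: false, // keep it out of for...in loops, like a native method
    value: function (searchElement, fromIndex) {
      var list = Object(this);
      var len = list.length >>> 0;
      var start = fromIndex | 0;
      var i = start >= 0 ? start : Math.max(len + start, 0);
      for (; i < len; i++) {
        var item = list[i];
        // NaN is treated as equal to NaN, unlike indexOf
        if (item === searchElement ||
            (item !== item && searchElement !== searchElement)) {
          return true;
        }
      }
      return false;
    }
  });
  return true;
}

// Typical use: patch the real prototype once, before any other script runs.
polyfillIncludes(Array.prototype);
```

Babel would happily transpile an arrow function that calls `includes`, but only something like the code above makes the method itself exist at run time.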

There are many ways you can include polyfills on your website or project, and that is what the rest of this article is going to talk about.

Polyfill The Quick and Dirty Way: Polyfill.io

First, you can sledge-hammer them into place by simply adding this script tag as the first script tag your web page loads:

<script src="https://cdn.polyfill.io/v2/polyfill.min.js"></script>

This will use polyfill.io’s CDN service to provide your website visitors just the polyfills for functionality not found in their browser’s JavaScript implementation. They do this by using the User-Agent header to understand what functionality is missing and avoid sending excess code for items the environment already supports.

The biggest downside to this method is that if you have many users on Chrome, Firefox and other very modern browsers where support for the latest standards is closely maintained, you are still forcing each of them to perform a blocking network load for no actual benefit. I would only use this as a solution if site load time was not a focus for the given website, or as a rapid-response “band-aid” any time a missing polyfill is causing site issues: I would throw the script tag on the site to restore functionality while I investigate exactly what I am missing.

Another consideration here is also the reliance on a 3rd party site for the functionality of your website. If their CDN has any type of failure, your website is going to have issues. This particular concern could be fixed by running your own copy of their open source service.

Polyfill: The Quick, Less Dirty, Less Harmful by Selective Loading

For me and my websites, I fundamentally care about site load time and I do not want to introduce any additional blocking JavaScript calls if I can avoid it. Specifically, users on a modern browser that already supports the extra functionality I need should not incur any additional delay in page load. To do this, I will employ a technique known as “Feature Detection”. Here is a code snippet you can place before any other JavaScript on your page to use Feature Detection to selectively load the polyfill:

<script>
  // Feature detection: load the polyfill only when something is missing.
  // The parentheses matter because `!` binds more tightly than `in`.
  if ( !("from" in Array) || !("fetch" in window) ) {
    document.write('<script src="https://cdn.polyfill.io/v2/polyfill.min.js"></sc'+'ript>');
  }
</script>

In this snippet, I am looking for Array.from and fetch support; if I do not find them, I load the polyfill for that browser. You could make this more efficient by looking at polyfill.io’s website for extra parameters you can add to tell it to only send the specific polyfills you need. Because this <script> tag is the first JavaScript code on our page, and because it is executed immediately (blocking DOM parsing), the document.write will cause the polyfill to be the next thing loaded, blocking DOM parsing even further until it is done. This is actually what you want, because the polyfills need to be in place before any other JavaScript on your site runs. At least that is what you logically want, but we can still improve upon this.
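The feature-detection idea generalizes beyond two hard-coded checks. The sketch below checks a list of features against a global object and builds a URL that requests only the missing ones through the service's `features` query parameter. The names `FEATURE_TESTS`, `missingFeatures` and `polyfillUrl` are hypothetical helpers of mine, and the base URL is passed in so you can point it at your own copy of the service. Note the parentheses around each `in` test: `!` binds more tightly than `in`, so an unparenthesized `!"from" in Array` would look for the key `false` instead of negating the result.

```javascript
// Each entry pairs a polyfill.io feature name with a test for native support.
// (Hypothetical helper names; extend the list with whatever your site uses.)
var FEATURE_TESTS = [
  { name: 'Array.from', present: function (root) { return !!root.Array && ('from' in root.Array); } },
  { name: 'fetch', present: function (root) { return 'fetch' in root; } }
];

// Return the names of the features that `root` (normally `window`) lacks.
function missingFeatures(root) {
  var missing = [];
  for (var i = 0; i < FEATURE_TESTS.length; i++) {
    if (!FEATURE_TESTS[i].present(root)) {
      missing.push(FEATURE_TESTS[i].name);
    }
  }
  return missing;
}

// Build a polyfill URL limited to the given features.
// `base` is the service endpoint, e.g. the v2 URL used in this article.
function polyfillUrl(base, features) {
  return base + '?features=' + features.join(',');
}
```

On the page you would call `missingFeatures(window)` and only write the script tag when the returned list is non-empty, using `polyfillUrl` for its `src`.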

Note: Mozilla Developer Network has a wonderful article with a whole lot more information on Implementing Feature Detection should you wish to know more details and other methods. Especially important on this page is the table titled “Summary of JavaScript feature detection techniques” as it shows proper ways of detecting functionality.

Warning: There is danger, danger in using document.write to load another script tag. I have read some vague comments that it disables certain optimizations in some browsers, but outside of an MDN document talking about Firefox I could not find any information — and the Firefox one does not affect what we are doing in our document.write. A bigger risk is that Google Chrome, on bad networks, may intervene and fail to load and execute your script at all! If you ensure that the polyfill is loading from the same domain name, however, then they will always execute it — so that is one way to protect yourself. Just watch out for web sites loading the main document from a primary web server and the rest of the resources from a CDN on another domain!

Polyfill: Still Quick, but Clean way: Selective Loading with Onload

The whole point of polyfilling is to allow us to use all sorts of newfangled things safely across all browsers. Sadly, in this next snippet of code, we are going to have to write browser-safe code because we do not yet have those polyfills!

<head>
<script>
  // Google-style queueing function for the polyfill completion
  window.pf = window.pf || function () { (pf.q = pf.q || []).push(arguments); };

  // Load polyfill.min.js and register our onload callback
  var script = document.createElement('script');
  script.src = 'https://cdn.polyfill.io/v2/polyfill.min.js';

  // Function to dequeue everything once the polyfills load
  script.onload = function () {
    // Copy the q locally
    var q = 'q' in pf ? pf.q : [];

    // Set the queueing function to immediate execution
    window.pf = function (x) { x(); };

    // Iterate through the queue in the order things were enqueued
    for (var i = 0; i < q.length; i++) {
      // Don't let exceptions from the execution of one queued method stop the rest from executing
      try {
        q[i][0]();
      } catch (e) {
        // XXX: console.error is not browser portable!
        console.error(e);
      }
    }
  };

  document.head.appendChild(script);
</script>
</head>

I have commented the code above with what each step is doing. The short version: it sets up a Google-style queue that can enqueue functions while the polyfill is loading. Once the polyfill load completes, the onload handler sets the pf() function to immediately execute any future functions passed to it (instead of queueing them), and then executes all of the previously enqueued functions in the order they were enqueued.
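From the rest of the site's code, using the queue looks something like the sketch below. To keep it runnable outside a browser, I have replaced the real script load with a hypothetical `polyfillsLoaded()` function that stands in for the onload handler, and `root` stands in for `window`. Everything else follows the same queue-then-flush pattern.

```javascript
// `root` stands in for `window` so the sketch also runs outside a browser.
var root = {};

// Queueing stub: until the polyfills arrive, remember every callback.
root.pf = root.pf || function () { (root.pf.q = root.pf.q || []).push(arguments); };

// Hypothetical stand-in for the script's onload handler.
function polyfillsLoaded() {
  var q = root.pf.q || [];
  // From now on, run callbacks immediately instead of queueing them.
  root.pf = function (fn) { fn(); };
  for (var i = 0; i < q.length; i++) {
    try {
      q[i][0]();
    } catch (e) {
      // Contain the error so one bad callback cannot block the rest.
    }
  }
}

var log = [];
root.pf(function () { log.push('first'); });        // queued
root.pf(function () { throw new Error('boom'); });  // queued; its error is contained
root.pf(function () { log.push('second'); });       // queued
polyfillsLoaded();                                  // flushes the queue in order
root.pf(function () { log.push('late'); });         // runs immediately
```

The nice property is that callers never need to know whether the polyfills have arrived yet: they always call `pf(fn)` and the function runs as soon as it safely can.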

Alternate Thought: I considered using JavaScript events and having the onload trigger an event — but then you would have to deal with race conditions if something registered with the event after the fact — that is when I decided to take advantage of the Google pattern above. You could use the same pattern while using events under the hood, but at that point it does not save you much.

Big Caveat (Warning!!!): The document.head.appendChild causes the script to be run as if you set the async attribute, regardless of how you set the attributes. This causes a big problem if you expect your callback code to run before DOMContentLoaded and its associated start of rendering. In fact, because we are incurring a network hit, I almost guarantee any code executed in this fashion will be delayed until after rendering has started. If any of your code modifies the appearance of anything above the fold, your visitor will experience a brief flicker.

This annoys me because I prefer to execute fast bits of JavaScript in-line during DOM processing if they affect the visual appearance of the page because I dislike flicker so much. When I have some future moments of sanity, I will come back to this problem and attempt another pass at solving it. Or, if someone out there has more insight, please reply and let me know!

One work-around technique to this flicker issue you could employ, on pages where you know it could be a problem, is the async-hide technique Google put forth for Google Optimize. Otherwise, the only current way around any type of visual flicker is to stick to the document.write method.

It is distressing to me that document.write is the only way I can effect a dynamic load of JavaScript in a blocking fashion. I want to be a good JavaScript developer and not use crusty-old document.write, but the browsers made me do it! Really! I tried all manner of trickery using document.createElement and inserting it into different parts of the DOM, but every time it loaded asynchronously and the rest of my JavaScript continued to run before the polyfills were available.

Eureka! (Or so I thought?)

I was proof-reading, and I tried again to find a solution. I found more information that clearly states that, when injecting scripts in this fashion, scripts are by default force-async, but you can disable this with script.async = false;. This ensures that the script you are injecting is loaded from the network and executed synchronously before any other <script> tag with a src attribute…

I was about to put a big, long example demonstration here of this working, and then I loaded my example in Chrome and it failed miserably. It just will not work. Chrome absolutely does not want you to be able to choose to dynamically load a script at run time and block other scripts. It is clear from a bug report for Chromium. I am therefore going to stop looking. Two funny things from this exploration: 1) Microsoft Edge does the right thing. 2) The Google Chromium bug report ends with:

document.write is generally considered to be an undesirable feature of the web platform, so there is a desire to see it used less rather than more.

This is me looking at them incredulously. I feel like I have a legitimate use case wanting to only affect those who need affecting and not those who do not. That requires a dynamic decision and injection. In theory Chrome would never need a polyfill and it would just not be important, but in practice I will not put something in which could fail in the future.
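For reference, the script.async = false attempt looks roughly like the sketch below. The helper name `injectOrdered` is hypothetical, and it takes the document as a parameter only so that it can be exercised outside a browser. As far as I can tell, turning async off keeps injected scripts executing in insertion order relative to one another, but in Chrome, at least, it does not make them block the scripts already in the page's markup, which is the behavior I was after.

```javascript
// Inject scripts so that they execute in the order given, not in download order.
// `doc` is normally `document`; `injectOrdered` is a hypothetical helper name.
function injectOrdered(doc, urls) {
  var added = [];
  for (var i = 0; i < urls.length; i++) {
    var script = doc.createElement('script');
    script.src = urls[i];
    script.async = false; // injected scripts default to async; turn that off
    doc.head.appendChild(script);
    added.push(script);
  }
  return added;
}
```

If this is useful at all, it is when the scripts that depend on the polyfill are injected the same way, for example by passing the polyfill URL first and the site script second, so that the ordering guarantee covers both.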

Just Load Them — Stop Trying To Be Smart!

Maybe it is just better to load the complete set of polyfills you require for your website to support all of your required browsers, always and every time.

Using my architecture from my first article in this series, you could simply npm install the polyfill of your choice (babel-polyfill is one such option) and either conditionally or always require them in your entry point scripts. This increases the size of your single site script, but you can minimize that by only adding the polyfills you need.

The key here is that, even though everyone will download the polyfills you need, none of your visitors incur any additional network round-trip impact. (And you also do not go crazy mad trying to make browsers do something they do not want to do.) It is something to consider at least, and, for the architecture of having a single _site.js for all of my on-every-page scripts, it is the likely way I will go.

What Polyfills Do You Need?

This is an interesting question, and it is one that I currently have to answer with “you have to look at your code and know what you are using that is not in a browser you support”. That is not an easy answer by any stretch of the imagination. The second answer is “you have to look at the error reports from your complete browser testing suite which you run on every browser you support”. (Yes, my tongue is heavily in my cheek now!)

The sad fact of the matter is that there is no static analysis tool which can take a browser list and evaluate your code to tell you the exact set of polyfills you need. I have thought about starting up such a project that would use the raw data from caniuse.com to help give a best-guess. (It would be best-guess because non-static typing makes it difficult to do accurate detection of the use of specific methods.) I saw a project talking about possibly doing this, but cannot find the reference now…

Alternatively you could write and load a modified polyfill which tracks which ones get used, run your really-complete unit tests and use that as a discovery process for your list of polyfills. This may be something that I do as it seems much easier to work with than attempting static analysis of JavaScript.
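A minimal sketch of that tracking idea, assuming you control where the polyfilled methods get installed: wrap each one so that the first call records its name. The `trackUsage` helper, the `used` list and the `fakeArray` object are hypothetical names of mine, and a real version would also need to cover constructors and static properties.

```javascript
// Replace obj[method] with a wrapper that records `label` in `used` on first call,
// then delegates to the original implementation.
function trackUsage(obj, method, label, used) {
  var original = obj[method];
  obj[method] = function () {
    if (used.indexOf(label) === -1) {
      used.push(label);
    }
    return original.apply(this, arguments);
  };
}

// Example: pretend these two methods came from a polyfill.
var used = [];
var fakeArray = {
  from: function (list) { return Array.prototype.slice.call(list); },
  of: function () { return Array.prototype.slice.call(arguments); }
};
trackUsage(fakeArray, 'from', 'Array.from', used);
trackUsage(fakeArray, 'of', 'Array.of', used);

// Stand-in for running the test suite: only `from` is ever called.
fakeArray.from('abc');
fakeArray.from('de');
// `used` now lists only the polyfills the code actually touched.
```

After a full test run, the contents of `used` become the shortlist of polyfills to ship, with the usual caveat that it is only as complete as the tests that exercised the code.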

Endings

Anyway, I hope this helps you if you too are in the early stages of understanding the modern-day JavaScript build ecosystem. It certainly has been an adventure for me, and writing this article has taught me much more about the inner workings of dynamically injecting script tags than I ever imagined I would learn.

This concludes my second article, and I look forward to discovering what my next topic in this series may be. I have been evolving my framework on the actual website I am driving, and I may bring those updates to you as a third installment. As always, I am open for comments and look forward to hearing any information I got wrong or which can be improved. Thank you!