Load Balancing a Go REST API Using Docker and Nginx, and Deploying It on DigitalOcean

What is Load Balancing?

Load balancing is a topic that often strikes beginner developers as difficult to achieve.

In a setup without load balancing, the client accesses our API directly. This works well until the load on that single instance starts to grow. One way to handle the extra load is vertical scaling.

To scale vertically, we increase the CPU and RAM of the machine. Vertical scaling hits a dead end once the system is already running on the most powerful hardware available; beyond that point, scaling up is simply impossible.

Eventually, you will have to switch over to a horizontally scaled system to handle more load. As visible in the diagram, a load-balanced setup can run as many server instances as the load requires.

The client request hits Nginx and is then forwarded to one of the server instances in a round-robin fashion, which distributes the load evenly. As demand grows, we can add instances at will without system downtime.
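To make "round-robin" concrete, here is a minimal Go sketch of the selection logic: each new request goes to the next instance in the list, wrapping around at the end. Nginx implements this internally in C; this is not its code, and the RoundRobin type and the api-1:5000 style addresses are hypothetical names used only for illustration.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// RoundRobin hands out backends in a fixed rotation. It is safe for concurrent
// use because the shared counter is only touched through atomic operations.
type RoundRobin struct {
	backends []string // must be non-empty, otherwise Pick divides by zero
	next     atomic.Uint64
}

// NewRoundRobin returns a picker over the given (hypothetical) backend addresses.
func NewRoundRobin(backends []string) *RoundRobin {
	return &RoundRobin{backends: backends}
}

// Pick returns the backend that should receive the next request.
func (r *RoundRobin) Pick() string {
	n := r.next.Add(1) - 1 // Add returns the incremented value, so step back to start at index 0
	return r.backends[n%uint64(len(r.backends))]
}

func main() {
	rr := NewRoundRobin([]string{"api-1:5000", "api-2:5000", "api-3:5000"})
	for i := 0; i < 6; i++ {
		fmt.Println("request", i+1, "->", rr.Pick())
	}
}
```

Running it sends requests 1 to 3 to api-1, api-2 and api-3, and then starts over at api-1, which is why every instance ends up with roughly the same share of the traffic.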

Prerequisites

- Docker — Ubuntu, Windows

- Docker Compose — Ubuntu, Windows

- Golang — Ubuntu, Windows

- A little knowledge of Docker and Golang will help you follow along.

In this blog, you’ll learn:

- Creating your own minimal Golang API

- Dockerizing the API

- Creating a Docker Compose file to control the instances

- Adding Nginx as a reverse proxy and load balancer

Once this is done, you can move on to learning how to deploy your API on DigitalOcean.

Feel free to skip to Section 3 if you have already set up and dockerized your own Go API.

The techniques presented in this blog are modular, and you’re free to swap the Go API for a NodeJS or a Flask API. The process of load balancing the API remains the same.

With the formalities done, let’s get started!

Section 1: Coding up the API

Code

Create a new directory and, inside it, a new file named main.go. Write the code given below inside the file.

Throughout the blog, the code has been commented thoroughly so you can understand it better.

Testing your API

- In a command line, type

go run main.go

- Open your browser and type

localhost:5000/hello

- You’ll receive the response “Hello world!” in the browser, and the console should look like this.

Code until Section 1: https://github.com/zerefwayne/load-balancing-go-api-nginx/tree/section1-api

You have successfully set up a very minimal Golang REST API and are ready to dockerize it.

Section 2: Dockerizing the API

Dockerfile

In the root of the project, create a new file named Dockerfile and add the following code to it.

Testing the Docker image

Open the command line and run the following command to build a Docker image of your project:

docker build -t loadbalance-api .

The . at the end is important: it sets the build context to the current directory.

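The last line of the output should be Successfully tagged loadbalance-api:latest, which means that the image has been built successfully. (If your Docker version uses BuildKit, the final lines look different, but the build should still finish without errors.) You can check out the image using docker images.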

To run a container from this image, type the following command in the command line:

docker run --name api --rm -p 5000:5000 loadbalance-api

Breaking this docker command down we have:

docker run — Used to start a container

--name api — Sets the name for the container

--rm — Automatically removes the container once it stops

-p 5000:5000 — Publishes the container's port 5000 on port 5000 of your machine (localhost:5000). The format is <host port>:<container port>.

loadbalance-api — The name of the image you wish to run

Once you execute the command, head over to your browser and go to localhost:5000/hello. You’ll receive a “Hello world!” response, and the command line will look something like the output above.

You have successfully created a Docker image of your API and can now move on to the Docker Compose section.

Section 3: Docker Compose

Docker Compose is a tool for defining, starting and stopping an application made up of multiple containers. We need it because we will run Nginx alongside multiple replicas of our API.

Using Docker Compose is as simple as creating a Docker image. Create a docker-compose.yml file in the root of your project and type the code given below.

docker-compose.yml

Running Docker Compose

Once you’ve created the docker-compose file, run the following command in your terminal: docker-compose up --build

This command starts up the application using the docker-compose.yml configuration. The --build flag tells Compose to rebuild the images every time before the containers are started.

We have configured Docker Compose and can move on to putting the API containers behind Nginx.

Section 4: Using Nginx to Load Balance the API

Nginx is a web server that can serve static web pages and also work as a reverse proxy and a very efficient load balancer. Nginx is configured through nginx.conf, just as Docker Compose is configured through docker-compose.yml.

Create a folder named nginx in the root of the project; inside it, create nginx.conf and write the following code.

nginx.conf

Updating Docker Compose

Just like we registered our Go API, we’ll write the configuration to start an Nginx container as well.

Update the docker-compose.yml with the following code.

You’ll notice a subtle change on line 7, but it reflects a significant concept: the port mapping 5000:5000 has been changed to just 5000.

In simple English, 5000:5000 means “Bind port 5000 of the container to localhost:5000.” This works well for a single instance of the API. However, when we start multiple instances, port 5000 on the host can be bound by only one of them; the others fail because port 5000 is already allocated.
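This is the same conflict any two processes hit when they ask the operating system for the same port. The small Go sketch below, independent of Docker, binds a port and then tries to bind the exact same address again. bindTwice is a hypothetical helper, and the exact error text depends on the OS (on Linux it ends with "bind: address already in use").

```go
package main

import (
	"fmt"
	"net"
)

// bindTwice opens a listener on addr, then tries to open a second one on the
// exact same address, mimicking two replicas that both want host port 5000.
// It returns the address the first listener got and the second attempt's error.
func bindTwice(addr string) (string, error) {
	first, err := net.Listen("tcp", addr)
	if err != nil {
		return "", err
	}
	defer first.Close()

	// Reuse the concrete address (port included) that the first listener received.
	second, err := net.Listen("tcp", first.Addr().String())
	if err == nil {
		second.Close()
	}
	return first.Addr().String(), err
}

func main() {
	// Port 0 asks the OS for any free port, much like Docker picks a random
	// host port when the mapping is just "5000".
	bound, err := bindTwice("127.0.0.1:0")
	fmt.Println("first listener bound to", bound)
	fmt.Println("second listener on the same port:", err)
}
```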

To solve this, we shorten it to just 5000. Docker then publishes each container's port 5000 on a random free port of the host, so the replicas no longer clash. A question naturally arises here: if the ports are random, how does Nginx figure out which address to forward the request to?

The answer lies in a pretty neat feature of Docker: Internal DNS Service.

In simple terms, you give the DNS service the name of your container, and it returns the container's IP address. You can see this in line 11 of nginx.conf: proxy_pass http://api:5000;

Notice that api here is the name of the service we listed in docker-compose.yml.

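Nginx asks Docker's DNS service to resolve api to the IP addresses of the running containers and forwards the request to port 5000 on one of them. The random host ports never come into play here, because Nginx talks to the containers directly over the internal Docker network.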
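To see what such a lookup returns, you could run a tiny Go program from inside any container attached to the same Compose network. With several replicas, Docker's embedded DNS typically answers the service name with one address per running container. resolveBackends is a hypothetical helper; the service name api and port 5000 match this tutorial's setup, but the name only resolves inside that network.

```go
package main

import (
	"fmt"
	"log"
	"net"
	"os"
)

// resolveBackends looks up every address behind a service name and pairs each
// one with the given port: the list of targets a proxy can rotate over.
func resolveBackends(service, port string) ([]string, error) {
	addrs, err := net.LookupHost(service)
	if err != nil {
		return nil, err
	}
	backends := make([]string, 0, len(addrs))
	for _, a := range addrs {
		// JoinHostPort adds brackets around IPv6 addresses, e.g. [::1]:5000.
		backends = append(backends, net.JoinHostPort(a, port))
	}
	return backends, nil
}

func main() {
	// "api" only resolves inside the Compose network; pass another name to try it elsewhere.
	service := "api"
	if len(os.Args) > 1 {
		service = os.Args[1]
	}
	backends, err := resolveBackends(service, "5000")
	if err != nil {
		log.Fatal(err)
	}
	for _, b := range backends {
		fmt.Println(b)
	}
}
```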

Testing the deployment

PS: Make sure you are running docker-compose v1.23+

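To run the application, run docker-compose --compatibility up --build. The --compatibility flag tells docker-compose to translate v3 deploy settings, such as a replica count, into their non-Swarm equivalents.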

Once the services have started, head over to your browser and go to http://localhost:80/hello and click refresh multiple times.

In the console, you’ll notice that docker-compose assigns a colour to each instance's log output and that successive requests are handled by different API instances. If you get this output, hurray! You’ve successfully built a load-balanced API using Docker, Docker Compose and Nginx.

An application is only useful once it is deployed, so in the upcoming section we’ll deploy it on a cloud server. I prefer DigitalOcean because it is one of the most elegant and powerful ways to deploy an app in just a few steps.

Setting up Droplet on DigitalOcean

A Droplet on DigitalOcean is analogous to an Amazon EC2 instance. In other words, it is a simple computer running for you in the cloud, just like the one you’re working on right now.

Provisioning a Droplet

Head over to DigitalOcean and create a new account. Once you have signed up, create a new project with the following configuration and hit Create Project.

Click on Create a new Droplet and launch it with all the default settings.

Once it has launched, the dashboard will look something like this.

The droplet is ready to use!

Click on the droplet and look for the Console option. It opens a command line in your browser that is directly connected to your machine. The username is root, and you’ll be asked to set a password the first time you use it. If you’re not able to log in, check online forums. One cheeky trick is to reset the root password from the droplet settings: DigitalOcean will email you a new password, which you can then use to log in and change it to one you like.

This is what your cloud console should look like when everything is set up correctly. Next, we install the necessary software.

Deploying the app on DigitalOcean

Install Docker and Docker Compose on the machine using the tutorials given below.

Installing Docker

Installing Docker Compose

Once both are installed, we need to fetch our API from GitHub.

Fetching the project from GitHub

You can upload your code to a GitHub repository and clone it here, or you can simply type:

git clone https://github.com/zerefwayne/load-balancing-go-api-nginx

This is the repository I created for this blog.

Running the API

cd into the project folder and type the following command again.

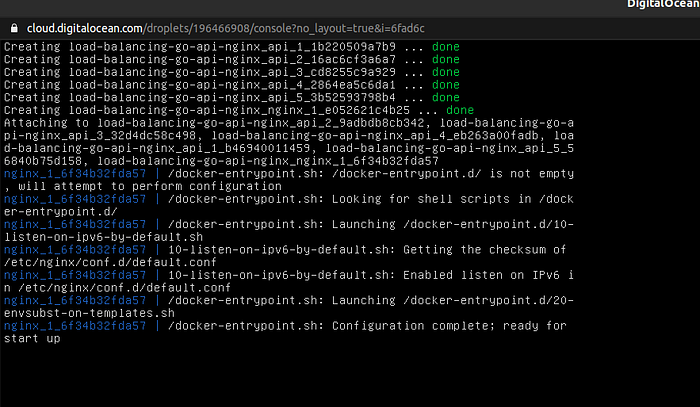

docker-compose --compatibility up --build

If your console looks like this, we have successfully run our project on the cloud. Now comes the last and most important part of this blog: testing the API deployed on the cloud. Keep the console window open while testing.

Testing the Load Balancing

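In the DigitalOcean dashboard, you’ll see the IPv4 address of your machine. Instead of localhost, use this address: http://<ip>/hello. Refresh the page several times and watch the console to see the requests being spread across the instances.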

Finishing Up

Congratulations!

You’ve successfully learnt how to implement a Go API, dockerize it, run it with Docker Compose, put it behind a load balancer and finally deploy it on the cloud.

Having learnt the concepts in this blog, you can now build APIs in the languages of your choice. Note the plural: you can create multiple API services in any language and load balance them all using the same Nginx and Docker Compose concepts.

About the author

Aayush Joglekar is a final-year B.Tech. student at IIIT Allahabad, India, majoring in Information Technology. He has previously interned at Zauba Cloud as a cloud-native engineering intern, working primarily with Go and MongoDB, and at Wingify as a front-end engineering intern. He likes to work with Go, NodeJS, MongoDB, PostgreSQL and VueJS, using the last to create beautiful frontends for his software.

Feel free to get in touch at aayushjog@gmail.com and follow more projects on GitHub.