Multi-core Node.js app: is it possible in a single-threaded framework?

It has been a while since I wrote my last article. It has been a very busy time since then, but also a great journey: I have been involved in very interesting projects and I moved to another city, among other things. Now I want to write about one of those projects, so I made room in my agenda to share some of my experiences working with Node. It has to do with a multi-core Node.js app, parallel functions, MongoDB … FTP … FTP 🙄? Yes, FTP.

But first things first: before we dive into what all this fuss is about, let's check out some definitions.

What is a core?

In relation to computer processors, also called the CPU or Central Processing Unit, a core is the processing unit that receives instructions and performs calculations, or actions, based on those instructions. A set of instructions can allow a software program to perform a specific function.

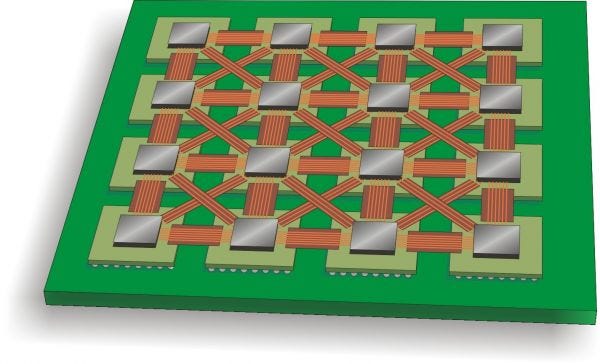

Processors can have a single core or multiple cores. A processor with two cores is called a dual-core processor, one with four cores a quad-core processor, and so on. The more cores a processor has, the more sets of instructions it can receive and process at the same time, which makes the computer/server faster for work that can be split across cores.
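To see how many cores Node.js detects on your own machine, a quick check with the built-in `os` module could look like the sketch below. The variable names are only illustrative, and the number reported is the count of logical cores, which can be higher than the number of physical cores.

```javascript
const os = require('node:os');

// os.cpus() returns one entry per logical core reported by the operating system.
const cores = os.cpus();
const coreCount = cores.length;

console.log(`This machine reports ${coreCount} logical core(s).`);
if (coreCount > 0) {
  console.log(`First core model: ${cores[0].model}`);
}
```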

What is parallel computing?

Parallel computing focuses on distributing tasks concurrently performed by processes across different processors. In a multiprocessor system, task parallelism is achieved when each processor executes a different thread (or process) on the same or different data.

In the simplest sense, parallel computing is the simultaneous use of multiple compute resources to solve a computational problem:

- A problem is broken into discrete parts that can be solved concurrently

- Each part is further broken down to a series of instructions

- Instructions from each part execute simultaneously on different processors

- An overall control/coordination mechanism is employed.

The computational problem should be able to:

- Be broken apart into discrete pieces of work that can be solved simultaneously;

- Execute multiple program instructions at any moment in time;

- Be solved in less time with multiple compute resources than with a single compute resource.

The compute resources are typically:

- A single computer with multiple processors/cores

- An arbitrary number of such computers connected by a network
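The first step in the list above, breaking a problem into discrete parts, can be sketched in a few lines. This is only an illustration of the decomposition step, not code from the project: `splitIntoChunks` and the sample `logLines` array are hypothetical names.

```javascript
// Split `items` into at most `parts` chunks, each holding up to
// ceil(items.length / parts) elements. Each chunk could then be handed
// to a different process or core.
function splitIntoChunks(items, parts) {
  if (!Number.isInteger(parts) || parts < 1) {
    throw new RangeError('parts must be a positive integer');
  }
  const chunkSize = Math.ceil(items.length / parts);
  const chunks = [];
  for (let start = 0; start < items.length; start += chunkSize) {
    chunks.push(items.slice(start, start + chunkSize));
  }
  return chunks;
}

// Hypothetical sample data: five log lines split for two workers.
const logLines = ['line 1', 'line 2', 'line 3', 'line 4', 'line 5'];
console.log(splitIntoChunks(logLines, 2));
```

The remaining steps (running the parts simultaneously and coordinating them) are what the cluster module takes care of later in the article.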

Now that we have some vocabulary, let's see how a framework commonly described as single-threaded can handle this type of scenario.

Multiprocessing with Node.js is something that not many developers use in their workflow, or consider from the beginning when it is time to develop an application, yet multiprocessing is baked into the core of Node.js.

Node is named Node to emphasize the idea that a Node application should comprise multiple small distributed nodes that communicate with each other. — @ Samer Buna

To get multiprocessing in Node.js, there is a built-in cluster module that lets you use the full CPU power of a machine out of the box. This module also helps increase the availability of your Node processes, because workers can be restarted one at a time, which makes it possible to restart the whole application with zero downtime.

If you are not very familiar with the Node.js cluster module, I recommend reading the following article:

Or if you are already familiar with it, or curious about how this will end 😁, then you are ready to continue ✌🏼.

What I will be showing you is a log parser app. It retrieves information from log files that a CDN (content delivery network) deposits on an FTP server. Our app connects to the FTP server and downloads any log files it finds. It listens for downloaded files so it can start parsing them, and once a log is parsed the information is saved to a MongoDB database. Then the app moves the already-downloaded logs on the FTP server to a backup folder, deletes the local copies it has parsed, and, and … 😰 uff, it seems to be a very heavy, challenging job for a single-threaded framework.

To achieve that, the job needs to be well defined so it can run smoothly, without suffering. As good developers, we need to separate out all the micro tasks we can detect, then design a solution and test it (yes, testing 😒 … testing, testing everywhere, anytime, anyone 😁) so that every task is independent of the others. Why split everything described above into micro tasks? Because we are going to use the Node.js cluster module, so the app can take all the power of the computer/server it runs on.

Before jumping into the code, let's see which strategies we are going to use. Because we are using the Node.js cluster module, we can scale our app to increase its availability and fault tolerance. To scale it successfully, we are going to implement two strategies:

Decomposing

We can scale an application by decomposing it based on functionalities and services. This means having multiple, different applications with different code bases and sometimes with their own dedicated databases and User Interfaces.

This strategy is commonly associated with the term Microservice, where micro indicates that those services should be as small as possible, but in reality, the size of the service is not what’s important but rather the enforcement of loose coupling and high cohesion between services. The implementation of this strategy is often not easy and could result in long-term unexpected problems, but when done right the advantages are great.

Splitting

We can also split the application into multiple instances where each instance is responsible for only a part of the application’s data. This strategy is often named horizontal partitioning, or sharding, in databases. Data partitioning requires a lookup step before each operation to determine which instance of the application to use. For example, maybe we want to partition our users based on their country or language. We need to do a lookup of that information first.
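The lookup step mentioned above can be as small as a deterministic function that maps a key to an instance. The sketch below is an assumption for illustration only: `instanceFor` is a hypothetical helper, and the simple string hash is just one possible way to spread keys across instances.

```javascript
// Hypothetical lookup step: map a partition key (for example a country code)
// to the index of the application instance responsible for that key.
function instanceFor(key, instanceCount) {
  let hash = 0;
  for (const char of String(key)) {
    // Simple deterministic string hash, kept in the unsigned 32-bit range.
    hash = (hash * 31 + char.codePointAt(0)) >>> 0;
  }
  return hash % instanceCount;
}

// The same key always resolves to the same instance.
for (const country of ['MX', 'US', 'ES']) {
  console.log(`${country} -> instance ${instanceFor(country, 4)}`);
}
```

Because the mapping depends on `instanceCount`, changing the number of instances moves keys around, which is one reason partitioning needs to be planned up front.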

Node.js makes this easy to implement, so now, fellows, it's time to get our hands dirty 🖐🏽.

# Building the app

So let's divide the job into the following tasks.

- Server — will handle the cluster and the main processes.

- Parser — will read the logs to parse the information needed.

- GeoIP — will obtain the locations from a given ip retrieved from the logs.

- Database — will save the information parsed to the database.

- DeleteLogs — will delete all downloaded local logs.

- MoveLogs — will move files from one folder to a backup folder in the ftp server.

As you can see, there is a lot to manage, so let's see if Node.js can handle it well.

So now let's start by reviewing the server.js file. I will split it into 3 parts, because it is a little bit lengthy.

Part 1: libraries and refactored methods

Things to notice in this block of code:

- refork method: here we listen to the workers, so if one of them crashes, exits, or closes for some reason, we can fork it back into the cluster and it is available again to do its job.

- readLastFiles method: this method is triggered when the FTP connection has downloaded all the log files. If there are files remaining to be parsed, it watches the file system to look for them.

- readBackup method: this method is triggered after readLastFiles is done. When a failure occurs with the DB connection, the records are saved in backup files; readBackup reads those files and retries inserting the records into the DB.

- openFTP method: as its name says, it is used to connect to the FTP server.

As you can see in this first block of code, I am importing readLogFiles, saveToDB, moveAndDelete, and geoIP. All these modules were designed following the decomposing and splitting strategies, so they can use all the cores of our machine and create a multi-core Node.js app :D.
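The project's own code is not reproduced here, so the following is only a minimal sketch of what a refork helper might look like, assuming it is built on the cluster module's `exit` event. The `clusterLike` parameter is my own addition so the function can be tried without forking real processes.

```javascript
const cluster = require('node:cluster');

// Attach a listener that replaces any worker that dies unexpectedly.
// `clusterLike` defaults to the real cluster module; it is a parameter
// only so this sketch can be exercised with a stand-in object.
function refork(clusterLike = cluster) {
  clusterLike.on('exit', (worker, code, signal) => {
    // exitedAfterDisconnect is true when the worker was stopped on purpose
    // (worker.disconnect() or worker.kill()), so it is not replaced.
    if (worker.exitedAfterDisconnect) return;
    console.log(
      `Worker ${worker.id} died (code=${code}, signal=${signal}); forking a replacement.`
    );
    clusterLike.fork();
  });
}
```

In the primary process this would be called once, as `refork()`, before or after the initial workers are forked. If each worker has a dedicated job, the replacement would also need to be told which job it takes over.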

Part 2: Main processes

Things to notice in this block of code:

- Here we are in the master cluster scope, where we initialize all the main processes.

- We listen to the messages coming from the workers and execute the corresponding logic.

- We download the log files from the FTP server.

- We set a time interval for checking for new log files on the FTP server.
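One way to picture the message handling in the master scope is a small router that forwards each result to the worker in charge of the next step. Everything in this sketch is an assumption made for illustration: the message shape, the role names, and the order of the pipeline are hypothetical and not taken from the original server.js.

```javascript
// Hypothetical message shape: { type: 'parsed' | 'located' | 'saved', payload }
// Hypothetical pipeline: parser -> geoip -> database -> moveLogs.
const NEXT_ROLE = {
  parsed: 'geoip',
  located: 'database',
  saved: 'moveLogs',
};

// Forward a worker's result to the worker responsible for the next step.
// `workersByRole` maps a role name to a cluster Worker (anything with .send()).
function routeMessage(workersByRole, message) {
  const nextRole = NEXT_ROLE[message.type];
  const target = nextRole && workersByRole[nextRole];
  if (!target) {
    console.warn(`No route for message type "${message.type}"`);
    return false;
  }
  target.send({ type: 'work', payload: message.payload });
  return true;
}
```

In the primary process each forked worker would be wired up with something like `worker.on('message', (msg) => routeMessage(workersByRole, msg))`, and the periodic FTP check could be scheduled with `setInterval`.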

Part 3: Workers logic

Things to notice in this block of code:

We need to set the corresponding scope for each worker, and the corresponding logic for each of them.

- Here we define the logic for each worker. Depending on the worker, it calls the imported module that does the needed job on a core, then sends the result as a message. The listener for the message event runs the final logic, which distributes the work among the workers so we can take full advantage of all the resources of the server.

- If a worker receives an error or something goes wrong, we exit the worker, and the refork method forks it back into the cluster.
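As a rough sketch of the worker side, each worker can pick a handler according to its role, run it when a message arrives, send the result back, and exit on failure so it gets replaced. The handlers below are hypothetical stand-ins for the real modules, and passing the role through a `ROLE` environment variable (set with `cluster.fork({ ROLE: 'parser' })`) is an assumption, not something the original code is known to do.

```javascript
// Hypothetical stand-ins for the real modules (readLogFiles, geoIP, ...).
const handlers = new Map([
  ['parser', async (payload) => ({ type: 'parsed', payload: String(payload).split(' ') })],
  ['geoip', async (payload) => ({ type: 'located', payload })],
]);

// Wire one worker process to the handler that matches its role.
// `proc` defaults to the real process object and is a parameter only so
// the sketch can be tried outside of a forked worker.
function runWorker(role = process.env.ROLE, proc = process) {
  const handler = handlers.get(role);
  if (!handler) {
    throw new Error(`Unknown worker role: ${role}`);
  }
  proc.on('message', async (message) => {
    try {
      // Do the job, then report the result back over the IPC channel.
      proc.send(await handler(message.payload));
    } catch (err) {
      console.error(`[${role}] failed:`, err.message);
      proc.exit(1); // exit so the primary can fork a replacement
    }
  });
}
```

Keeping one handler per role means every worker runs the same entry file but only ever executes the logic for its own task.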

As you may notice, the Node.js cluster module is based on the events paradigm.

# Testing a multi core app structure

We cannot directly test the code above because it needs the rest of the modules, and unfortunately I cannot share them with you: the code belongs to the company, as does the connection to the FTP server. But I will show the basic structure of a multi-core Node.js app so you can test the cluster module.

This is the basic structure for testing the Node.js cluster module. One important thing to notice: you can create as many workers as your server/computer has cores. If you create more workers than cores, the app will still run, but the extra workers compete for CPU time, which may cause undesired behavior such as slower performance.

## Final comments

So this article shows you how to take advantage of all your server resources with a Node.js app. There are still some caveats because of the event loop philosophy: each process runs its JavaScript on a single thread. But V8 is powerful, and the cluster module lets us use all the cores by running several Node.js processes, each with its own V8 instance and event loop.

# Thanks for reading

👨🏻‍💻👩🏻‍💻 Thanks for reading! I hope you found value in the article! If you did, punch that Recommend button 💚 so that more people can find it on the web, recommend it to a friend, share it, or read it again.

I’m doing this to share my knowledge with the community, but I’m also doing this to LEARN. Your feedback and corrections are absolutely welcome!

In case you have questions on any aspect of this article or need my help in understanding something related to the topic, feel free to tweet me or leave a comment below.

Until next time! 😁 ✌🏼

You can follow me on Twitter @cramirez_92

https://twitter.com/cramirez_92

Or we can connect on LinkedIn

https://www.linkedin.com/in/cristian-ramirez-8982177a/