Protocol Buffers, Part 1 — Serialization Library for Microservices

Over the last few years, Microservices have become a common way to tackle the challenges of building larger projects. However, once we split a system into separate services, we face a set of challenges that didn’t exist in a monolithic architecture. One of them is how to serialize the data exchanged between the services in the system.

While there’s no single definition of Microservices, one short definition I find on point is Sam Newman’s:

Small autonomous services, that work together, modelled around a business domain.

Sounds good, but how do we maintain the “autonomy” of individual services while still effectively “working together”? It turns out that serialization libraries such as Protocol Buffers, Avro and Thrift can help quite a bit here. In this post I’d like to share my experience building Microservices with Protocol Buffers (a.k.a. protobuf).

Depending on your background, you might ask why we should switch away from whatever serialization method has worked for us in monolithic architectures so far. To answer that question, I’ll try to make the case for why Protobuf is a better fit for Microservices.

Let’s start by explaining what Protobuf is. From Wikipedia:

Protocol Buffers are a method of serializing structured data. It is useful in developing programs to communicate with each other over a wire or for storing data. The method involves an interface description language that describes the structure of some data and a program that generates source code from that description for generating or parsing a stream of bytes that represents the structured data.

I would argue that it is the schema / IDL (interface description language), together with its well-defined rules for changing that schema over time (a.k.a. “schema evolution”), that makes Protobuf especially suitable for Microservices.

- Schemas make API communication across teams more effective, which addresses the “working together” aspect.

- Schema evolution, in turn, lets a service expose a schema without giving up its “autonomy”.

In other words, with Protobuf each service can expose an explicit API while keeping the ability to roll out changes to that API without a lockstep deployment of all its dependent services.

It’s also worth listing a few more attributes of Protobuf serialization that come in handy with Microservices:

- Cross-language support (Java, Python, Go, C++, C#, Ruby, Objective-C).

- An optimized binary format, for fast serialization and compact messages.

- Support for a JSON format as well.
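To make the “compact messages” point a little more concrete, here is a minimal, hand-written Java sketch of the base-128 varint encoding that the protobuf wire format uses for integers and for field keys. It is for illustration only and is not the protobuf library’s API; the class name `VarintDemo` is hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

public class VarintDemo {

    static final int WIRE_TYPE_VARINT = 0;

    // Base-128 varint: 7 payload bits per byte, least-significant group first.
    // The high bit (0x80) of a byte means "more bytes follow".
    static byte[] encodeVarint(long value) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        while ((value & ~0x7FL) != 0) {
            out.write((int) ((value & 0x7F) | 0x80));
            value >>>= 7;
        }
        out.write((int) value);
        return out.toByteArray();
    }

    // On the wire a field is a key followed by its value, where
    // key = (field_number << 3) | wire_type, itself encoded as a varint.
    static byte[] encodeVarintField(int fieldNumber, long value) {
        byte[] key = encodeVarint(((long) fieldNumber << 3) | WIRE_TYPE_VARINT);
        byte[] payload = encodeVarint(value);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.write(key, 0, key.length);
        out.write(payload, 0, payload.length);
        return out.toByteArray();
    }

    // Formats bytes as space-separated lowercase hex, e.g. "08 96 01".
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(String.format("%02x", b & 0xFF));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        byte[] proto = encodeVarintField(1, 150);
        byte[] json = "{\"id\":150}".getBytes(StandardCharsets.UTF_8);
        System.out.println("protobuf: " + hex(proto) + " (" + proto.length + " bytes)");
        System.out.println("JSON:     " + json.length + " bytes");
    }
}
```

Field 1 holding the value 150 takes three bytes (`08 96 01`), versus ten bytes for the equivalent `{"id":150}` in JSON. Most of the saving comes from sending the field number instead of the field name, so the exact ratio depends on the data.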

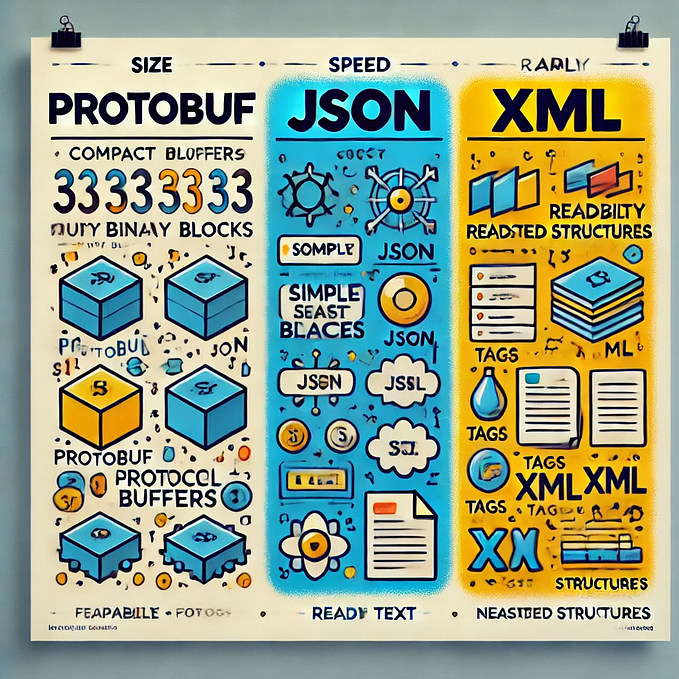

We can now contrast Protobuf with two common alternatives:

- JSON: supports cross-language use and schema evolution (if you know what you are doing), but lacks the notion of a schema as a clear API, and can be too slow for some use cases.

- Built-in language serialization (for example, Java serialization): has a rigid schema that is not amenable to evolution, and isn’t cross-language.

The full code example can be found at https://github.com/rotomer/protobuf-blogpost-1

As a case in point, assume you are building a backend Microservice for provisioning a VM (much like my team does at CloudShare).

A (somewhat simplified) command message to provision a VM would look like this in Protobuf IDL:

You can find more on the Protobuf IDL in the official language guide. For now, note that the snippet defines a ProvisionVmCommand message composed of three fields: id, region and vm_spec.

Next, we run the Protobuf compiler (protoc), which generates the Java message classes used in our code. This is easy to do as a pre-compilation step via Maven / Gradle plugins.
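Generated message classes are long, but their public surface is roughly an immutable message with a builder and one constant per field number. The following is a hand-written, heavily simplified stand-in that only mimics that shape; it is not protoc output. As a hypothetical assumption, all three fields are treated as strings with field numbers 1 to 3 (in a real schema vm_spec could well be a nested message).

```java
import java.util.Objects;

// Hand-written, simplified stand-in for a protoc-generated message class.
// Hypothetical assumption: id, region and vm_spec are string fields 1, 2, 3.
// Real generated code also contains serialization, parsing, equals/hashCode, etc.
public final class ProvisionVmCommand {
    // Generated classes expose each field's number as a constant like these.
    public static final int ID_FIELD_NUMBER = 1;
    public static final int REGION_FIELD_NUMBER = 2;
    public static final int VM_SPEC_FIELD_NUMBER = 3;

    private final String id;
    private final String region;
    private final String vmSpec;

    private ProvisionVmCommand(Builder builder) {
        this.id = builder.id;
        this.region = builder.region;
        this.vmSpec = builder.vmSpec;
    }

    public String getId() { return id; }
    public String getRegion() { return region; }
    public String getVmSpec() { return vmSpec; }

    public static Builder newBuilder() { return new Builder(); }

    // Messages are immutable; construction goes through a mutable builder.
    public static final class Builder {
        // Unset string fields read back as "" rather than null.
        private String id = "";
        private String region = "";
        private String vmSpec = "";

        private Builder() {}

        // Like generated setters, these reject null values.
        public Builder setId(String value) { id = Objects.requireNonNull(value); return this; }
        public Builder setRegion(String value) { region = Objects.requireNonNull(value); return this; }
        public Builder setVmSpec(String value) { vmSpec = Objects.requireNonNull(value); return this; }

        public ProvisionVmCommand build() { return new ProvisionVmCommand(this); }
    }

    public static void main(String[] args) {
        ProvisionVmCommand command = ProvisionVmCommand.newBuilder()
                .setId("cmd-42")
                .setRegion("us-east-1")
                .build();
        // vm_spec was never set, so it reads back as an empty string.
        System.out.println(command.getId() + " / " + command.getRegion()
                + " / vmSpec=\"" + command.getVmSpec() + "\"");
    }
}
```

With the real generated class, client code reads much the same way, and serialization and parsing are one call each: `toByteArray()` on a message and the static `ProvisionVmCommand.parseFrom(bytes)`.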

Now, let’s define a VmProvisioningService that receives the command in binary serialized form via some messaging system (a message queue or Pub/Sub), deserializes it, and “provisions a VM” accordingly.

Finally, we’ll create a test that acts as the client code: it creates a ProvisionVmCommand, serializes it and sends it to the VmProvisioningService (in reality the call would go through a message-passing transport rather than an in-process method call).
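The client-to-service flow described above can be sketched without the protobuf runtime by hand-rolling both halves of the wire format: the client writes each field as a key followed by a length-prefixed UTF-8 string, and the service reads the keys back and dispatches on the field number. Everything here is a hypothetical simplification: the class and method names are made up, the three fields are assumed to be strings numbered 1 to 3, and a direct method call stands in for the message queue. With generated code, the two halves would be `toByteArray()` and `ProvisionVmCommand.parseFrom(bytes)`.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public class ProvisionVmRoundTrip {

    // ---- client side: serialize the command ----

    static void writeVarint(ByteArrayOutputStream out, int value) {
        while ((value & ~0x7F) != 0) {          // more than 7 bits left?
            out.write((value & 0x7F) | 0x80);   // low 7 bits plus continuation flag
            value >>>= 7;
        }
        out.write(value);
    }

    // Length-delimited field (wire type 2): key, byte count, UTF-8 payload.
    static void writeString(ByteArrayOutputStream out, int fieldNumber, String value) {
        byte[] bytes = value.getBytes(StandardCharsets.UTF_8);
        writeVarint(out, (fieldNumber << 3) | 2);
        writeVarint(out, bytes.length);
        out.write(bytes, 0, bytes.length);
    }

    static byte[] serialize(String id, String region, String vmSpec) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeString(out, 1, id);
        writeString(out, 2, region);
        writeString(out, 3, vmSpec);
        return out.toByteArray();
    }

    // ---- service side: deserialize and act on the command ----

    static int readVarint(ByteBuffer in) {
        int result = 0;
        for (int shift = 0; shift < 32; shift += 7) {
            byte b = in.get();
            result |= (b & 0x7F) << shift;
            if ((b & 0x80) == 0) return result;
        }
        throw new IllegalArgumentException("malformed varint");
    }

    // Returns {id, region, vmSpec}; fields that were not sent stay "".
    static String[] deserialize(byte[] message) {
        String[] fields = {"", "", ""};
        ByteBuffer in = ByteBuffer.wrap(message);
        while (in.hasRemaining()) {
            int key = readVarint(in);
            int fieldNumber = key >>> 3;
            if ((key & 0x7) != 2) {
                throw new IllegalArgumentException("this sketch only handles string fields");
            }
            byte[] payload = new byte[readVarint(in)];
            in.get(payload);
            // Field numbers outside 1..3 are ignored.
            if (fieldNumber >= 1 && fieldNumber <= 3) {
                fields[fieldNumber - 1] = new String(payload, StandardCharsets.UTF_8);
            }
        }
        return fields;
    }

    // Hypothetical service entry point: bytes in, a description of the action out.
    static String handle(byte[] message) {
        String[] cmd = deserialize(message);
        return "provisioning VM " + cmd[2] + " in " + cmd[1] + " for command " + cmd[0];
    }

    public static void main(String[] args) {
        // "Test" acting as the client; a direct call stands in for the message queue.
        byte[] wire = serialize("cmd-42", "us-east-1", "small");
        System.out.println(handle(wire));
    }
}
```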

Where’s the punch?

Much like the cute mushrooms in the picture above, it isn’t obvious what punch Protobuf actually packs (oh, these mushrooms do pack a punch).

As we can see, the code needed to serialize to and from a byte array is fairly simple, but that is true of many serialization libraries. The main benefit of Protobuf is that it provides a schema to the client code while still allowing changes to that API without forcing the client to update its code.

For example, consider a scenario where a new version of the VmProvisioningService is deployed with an additional (optional) field in the ProvisionVmCommand, say availability_zone. With Protobuf, the client code doesn’t need to be updated and deployed in lockstep. The client can keep using the original Protobuf message definitions and send messages according to the original schema, and the VmProvisioningService will parse them just fine. This works because each field is identified on the wire by its field number, so a field that is absent from a message simply takes its default value on the receiving side. The client code can then update at its own pace.
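The scenario above can be simulated by hand using the wire-format rules. In this sketch, all names are hypothetical, the three original fields are assumed to be strings numbered 1 to 3, and availability_zone is assumed to be string field 4. A message written with the old schema is read by the new service, and a message written with the new schema is read by an old reader. Like the real protobuf runtime, the reader tolerates both missing and unknown field numbers; unlike it, this sketch only understands length-delimited (string) fields.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

public class SchemaEvolutionSketch {

    static void writeVarint(ByteArrayOutputStream out, int value) {
        while ((value & ~0x7F) != 0) {
            out.write((value & 0x7F) | 0x80);
            value >>>= 7;
        }
        out.write(value);
    }

    // One string field: key (field_number << 3 | wire type 2), length, UTF-8 bytes.
    static void writeString(ByteArrayOutputStream out, int fieldNumber, String value) {
        byte[] bytes = value.getBytes(StandardCharsets.UTF_8);
        writeVarint(out, (fieldNumber << 3) | 2);
        writeVarint(out, bytes.length);
        out.write(bytes, 0, bytes.length);
    }

    static int readVarint(ByteBuffer in) {
        int result = 0;
        for (int shift = 0; shift < 32; shift += 7) {
            byte b = in.get();
            result |= (b & 0x7F) << shift;
            if ((b & 0x80) == 0) return result;
        }
        throw new IllegalArgumentException("malformed varint");
    }

    // Collects every string field by number. Each field carries its own number
    // and length, so the reader can step over fields it has never heard of.
    static Map<Integer, String> readFields(byte[] message) {
        Map<Integer, String> fields = new HashMap<>();
        ByteBuffer in = ByteBuffer.wrap(message);
        while (in.hasRemaining()) {
            int key = readVarint(in);
            if ((key & 0x7) != 2) {
                throw new IllegalArgumentException("this sketch only handles string fields");
            }
            byte[] payload = new byte[readVarint(in)];
            in.get(payload);
            fields.put(key >>> 3, new String(payload, StandardCharsets.UTF_8));
        }
        return fields;
    }

    // Old schema: id = 1, region = 2, vm_spec = 3.
    static byte[] encodeV1(String id, String region, String vmSpec) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeString(out, 1, id);
        writeString(out, 2, region);
        writeString(out, 3, vmSpec);
        return out.toByteArray();
    }

    // New schema: the same fields plus availability_zone = 4 (hypothetical number).
    static byte[] encodeV2(String id, String region, String vmSpec, String availabilityZone) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] v1Fields = encodeV1(id, region, vmSpec);
        out.write(v1Fields, 0, v1Fields.length);
        writeString(out, 4, availabilityZone);
        return out.toByteArray();
    }

    // New service, old client: field 4 is absent, so it falls back to "".
    static String availabilityZoneSeenByNewService(byte[] message) {
        return readFields(message).getOrDefault(4, "");
    }

    // Old reader, new client: it only looks up the numbers it knows; field 4
    // is parsed past and ignored instead of causing an error.
    static String regionSeenByOldReader(byte[] message) {
        return readFields(message).getOrDefault(2, "");
    }

    public static void main(String[] args) {
        byte[] fromOldClient = encodeV1("cmd-1", "us-east-1", "small");
        byte[] fromNewClient = encodeV2("cmd-2", "eu-west-1", "large", "eu-west-1a");
        System.out.println("availability_zone from old client: \""
                + availabilityZoneSeenByNewService(fromOldClient) + "\"");
        System.out.println("region from new client: " + regionSeenByOldReader(fromNewClient));
    }
}
```

The rule this relies on is that a field number, once used, is never reassigned to a different meaning; the official language guide’s section on updating a message type lists the full set of safe changes.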

This means we gain the autonomy of a separate deployment cadence for each Microservice, just as we wanted, without sacrificing the effective collaboration between services that exposing a schema enables.

Next posts in the series

Be sure to check out the next posts in the series:
