Why you should care about a KB?

I started my career as a developer few years ago and frankly, I didn’t care (enough) about hardware back then.

I always thought software development was all about writing a working code which should have best algorithmic performance (Big-Oh) and compiler will take care of other optimisations.

I couldn’t have been more wrong.

Let’s dive into one of the most sophisticated yet often ignored piece during programming : CPU Cache.

What does speed have to with storage?

Everything. The major bottleneck these days is not the CPU speed but the memory access speed. Have a look at these numbers below by Jonas Bonér.

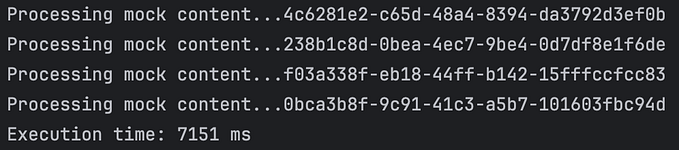

A simple main memory reference takes 100ns. This may not seems like a lot but when you are developing for more than 10million RPM, this means you are spending 1 second every minute just to get a reference from memory. Reading from memory is a whole another pain.

However, if you notice L1 and L2 cache reference takes a lot less time (0.5 ns and 7 ns respectively) than memory. But, why is that?

It’s because in the CPU, L1 and L2 cache reside closer to CPU core than RAM. Apart from that, caches generally use SRAM (Static RAM) which is much faster but costlier than DRAM (Dynamic RAM) used in memory.

A 4 core processor today consists of L1 Instructions cache and L1 data cache per core. It then contains a L2 cache per core which holds both Instruction and Data.

Sometimes L2 cache is shared between a pair of cores. L3 cache is the common cache for all the cores and finally we have RAM. All the data which resides in L1 cache will be there in the higher levels of cache and memory. Same goes for L2 and L3.

Let’s put everything in cache

That’s great. Let’s see how much data you can store in each of these caches. Below are the number of from my Macbook Pro (Early 2015 edition) with a Core i5 processor:

hw.l1icachesize: 32768

hw.l1dcachesize: 32768

hw.l2cachesize: 262144

hw.l3cachesize: 3145728

Okay. So my modern processor on which I can run nearly everything without a lag has only 64KB of L1 cache per core, 256KB of L2 cache per core and 3MB of L3 cache. As a 24 year old, I never had to care about a few megabytes in my life.

And now I have this daunting task, to fit my program and my objects within 3MB. To give you the context, a normal Java Thread starts with a stack size of almost 1 to 8MB. (Even the latest i7 processors contain only 12MB of cache)

An immediate solution is to write fewer lines of code which will reduce the number of instructions which need to be cached. But that ‘s not always a feasible option. Let’s first understand some basic cache concepts.

Cache lines

Cache lines are the unit of access in a cache. When a value is fetched from memory to cache, it’s not a single value but multiple adjoining value equal to the size of the cache line.

Modern cache lines have a size of 64 bytes. Same is true for my Macbook Pro. A simple sysctl -a | grep cacheline will yield

hw.cachelinesize: 64

So CPU fetches 64 bytes from memory to cache and writes the same number of bytes to the memory. This means if you have an 4 byte integer array whose value will lie close to each other, CPU will fetch 16 integers at once from this array.

This means that a linear traversal through array is much faster than it actually seems.

“Linear array search can beat log2n searches of heap-based BSTs.

Big-Oh wins for large n, but hardware caching takes early lead.” — Scott Meyers, Cpu Caches and Why You Care

This is also the same reason if you create a 2-D matrix, it’s row major traversal will be faster than it’s column major traversal. A row is stored in consecutive memory locations and is thus fetched in a cache line.

A cache is also smart enough to prefetch the previous or next cache line required, depending upon the instruction. So, the more predictable is your access pattern the better will be the performance..

Cache invalidation

So you can get data into cache now and that’s great. But what happens when data goes into two different cache locations. e.g. A variable x might be in the L1 D catch of Core 1 as well as Core 2. What will happen when you update that variable?

This situation is known as a data race. When multiple threads try to access the same value at same time and at least one of them is a write.

Luckily for us, CPU’s takes care of this. Whenever a write happens for a memory location in cache, all the other cache references for that location are invalidated and is fetched again from the memory by the core, if required. However, since the unit of cache is a cache line, the whole cache line will be goner rather than only required bytes.

This creates new problems for Multithreaded systems. When two or more threads try to simultaneously modify bytes falling on the same cache line, most of the time is went into invalidating the cache and reading the updated bytes from the memory. This effect is known as False sharing.

If you have suspicious lack of scalability, you might be suffering from this effect.

The easiest way to tackle this is to always operate on local variables inside a thread and then once the processing is done, use those values to update a global state.

Cache associativity

Developers generally don’t need to pay attention to this. If you want you can skip this section.

A cache is divided into a number of sets.

Each set can hold a number of cache lines.

Caches which can hold 1 cache line per set are called directly mapped cache.

Caches which can hold all the cache lines per set are called fully associative cache.

Directly mapped cache are not performant and fully associative caches are costly for practical systems, so a typical CPU has an E way set-associative cache. This means a set can hold E cache lines.

On my laptop, the cache is 8 way associative. The L3 cache is 3MB in size and a cache line is 64 bytes.

This means my cache has roughly 3MB/(8*64 bytes) = 6144 sets. (Cache size ( C ) is equal to S * E * B where S = no. of sets, E= no. of cache lines in each set and B = no. of bytes in each cache line).

I said roughly because I haven’t accounted for tag and the valid bits.

What this means is that, if I have addresses that differ by 64 * 6144 = 393216 bytes they are going to fall in the same set.

If we frequently access such addresses more than 8 times, the values will keep on competing for cache lines in a set (because it’s 8-way associative) and thus our program will be slower.

One of the immediate effects of this can occur when you are operating on multiple vectors simultaneously (e.g. D += A[i] * B[i] * C[i], where A & B are vectors).

Let’s assume if our cache size was 256 bytes and it was 2-way set associative and cache line size is 64 bytes). This means our cache has 2 sets (256/(2*64)).

Each vector consists of 8 byte integers and has 8 elements.

This implies values of the vectors are stored in following addresses (assuming they are placed continuously)

A -> (0, 120), B ->(128, 248), C-> (256, 376)

So, the 64 bytes blocks of each array are gonna compete with each other to occupy the same set. but since our set can only hold 2 such blocks, the latter is going to replace the former and the cpu will have to go to memory again to fetch the next element.

This is a waste of CPU time and should be avoided. You can add some padding (e.g. 64 bytes) so that these values don’t compete with each other for same set.

Utilise cache to the fullest potential

Here are the tips to take cache performance to the maximum.

- Maintain spatial and temporal locality of data. Repeated references to a local variable are good as it’ll be in cache. Also, accessing memory in a sequential manner means utilising the already loaded words in cache line and thus lowering the cache miss rate.

- Identify suspicious lake of scalability and write to local variables instead of global ones.

- Avoid unnecessary memory allocations. Memory is costly and it’s access is not as fast as it seems. Don’t unnecessarily copy objects from one place to another (unless you are dealing with immutable objects in functional programming).

In part 2, I’ll be telling about another aspect of memory which is it’s runtime/compile time management.

Here are some resources I used to learn and validate these concepts:

Connect with me on LinkedIn or Facebook or drop a mail to kharekartik@gmail.com to share the feedback.

✉️ Subscribe to CodeBurst’s once-weekly Email Blast, 🐦 Follow CodeBurst on Twitter, view 🗺️ The 2018 Web Developer Roadmap, and 🕸️ Learn Full Stack Web Development.