KUBERNETES

Watch Your Kubernetes Cluster Events With EventRouter and Kafka

Record what happens in your Kubernetes cluster

You are probably familiar with Kubernetes events, especially if you have investigated a malfunction in your cluster with the well-known kubectl describe command or the Event API resource. Events are a goldmine of information.

$ kubectl get events

15m Warning FailedCreate replicaset/ml-pipeline-visualizationserver-865c7865bc Error creating: pods "ml-pipeline-visualizationserver-865c7865bc-" is forbidden: error looking up service account default/default-editor: serviceaccount "default-editor" not found

However useful this information is, it is only kept temporarily. The retention period depends on how the cluster is configured and is generally between 5 minutes and 30 days (the kube-apiserver default, set with the --event-ttl flag, is one hour). You may want to keep this precious information for auditing purposes or later diagnosis in more durable and efficient storage such as Kafka. You can then react to certain events by having a tool (e.g., Argo Events) or your own applications subscribe to a Kafka topic.

In this article, I will show you how to build such a pipeline for processing and storing Kubernetes cluster events.

What We Want to Build

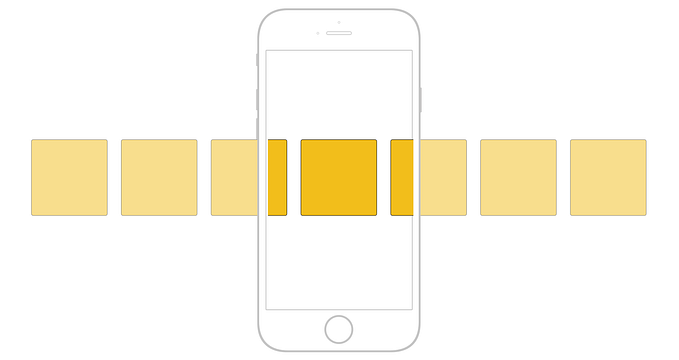

We will build a complete processing chain for Kubernetes events. The main components are:

- EventRouter from Heptio Labs, a Kubernetes event handler that forwards all the cluster events to a configurable sink, such as a Kafka topic.

- Strimzi operator as an easy way to manage Kafka brokers in Kubernetes.

- A custom Go binary to distribute the events into their target Kafka topics.

Why do we want to distribute the events into different topics? Suppose, for example, that each namespace in your cluster holds the Kubernetes assets of a specific customer. You will clearly want to separate each customer’s events from the others before using them.
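
To make the per-namespace routing idea concrete, here is a minimal sketch in Go of the decision the custom binary has to make: given one raw message, which topic should it be copied to? It is not the code from the repository. It assumes each message is a JSON envelope of the form {"verb": ..., "event": {...}} wrapping a standard Kubernetes Event, and the k8s-events- topic prefix is a hypothetical naming convention chosen for illustration. Reading from and writing to Kafka is left out on purpose, so the example only needs the standard library.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// envelope mirrors only the fields this sketch relies on.
// Assumption: the producer wraps each Kubernetes Event as
// {"verb": "...", "event": {...}}; adjust if your messages differ.
type envelope struct {
	Verb  string `json:"verb"`
	Event struct {
		Metadata struct {
			Namespace string `json:"namespace"`
		} `json:"metadata"`
	} `json:"event"`
}

// topicPrefix is a hypothetical naming convention, not taken from the article.
const topicPrefix = "k8s-events-"

// targetTopic returns the per-namespace topic a raw event message
// should be copied to, or an error if the message cannot be routed.
func targetTopic(raw []byte) (string, error) {
	var env envelope
	if err := json.Unmarshal(raw, &env); err != nil {
		return "", fmt.Errorf("decode event: %w", err)
	}
	ns := env.Event.Metadata.Namespace
	if ns == "" {
		// Events without a namespace cannot be mapped to a customer topic.
		return "", errors.New("event has no namespace")
	}
	return topicPrefix + ns, nil
}

func main() {
	msg := []byte(`{"verb":"ADDED","event":{"metadata":{"namespace":"customer-a"}}}`)
	topic, err := targetTopic(msg)
	if err != nil {
		fmt.Println("skip:", err)
		return
	}
	fmt.Println(topic) // prints "k8s-events-customer-a"
}
```

Keeping the routing decision in a pure function like this makes it easy to unit test without a running Kafka cluster. The consumer and producer around it can then be wired with whichever Kafka client you prefer.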

All the configurations, source code, and detailed setup instructions may be found in this GitHub repository.